Agentic Design Patterns

The Rise of Agentic AI: A Framework for Scalable Intelligence

AI Agent Design Patterns are at the forefront of modern application development, enabling intelligent, interactive workflows powered by large language models (LLMs). As these patterns evolve, they redefine how we build systems that think, act, and adapt in real time…

However, a more powerful approach is emerging: agentic workflows. These systems let LLMs reason, act, observe outcomes, and iterate, which often improves results on complex, multi-step tasks by imitating the cognitive loop of human problem-solving.

With the explosion of available tools and platforms, the AI agent landscape offers immense potential — but also increasing complexity. To help navigate this evolving space, we present a structured framework of six key design patterns that support the development of robust, scalable, and intelligent agentic applications.

These patterns are foundational building blocks for modern AI systems — and when combined effectively, they unlock powerful capabilities far beyond traditional prompt-based use.

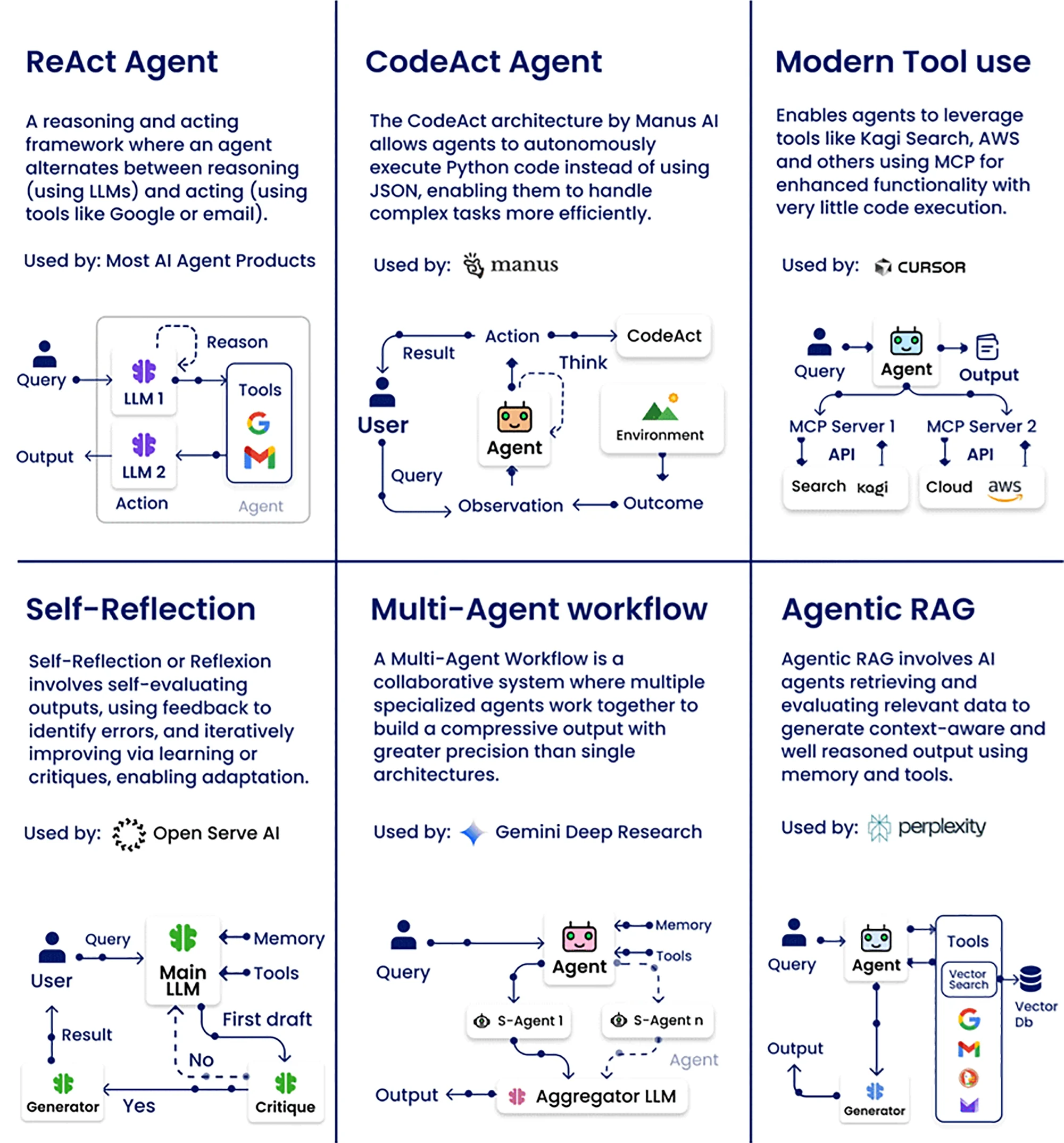

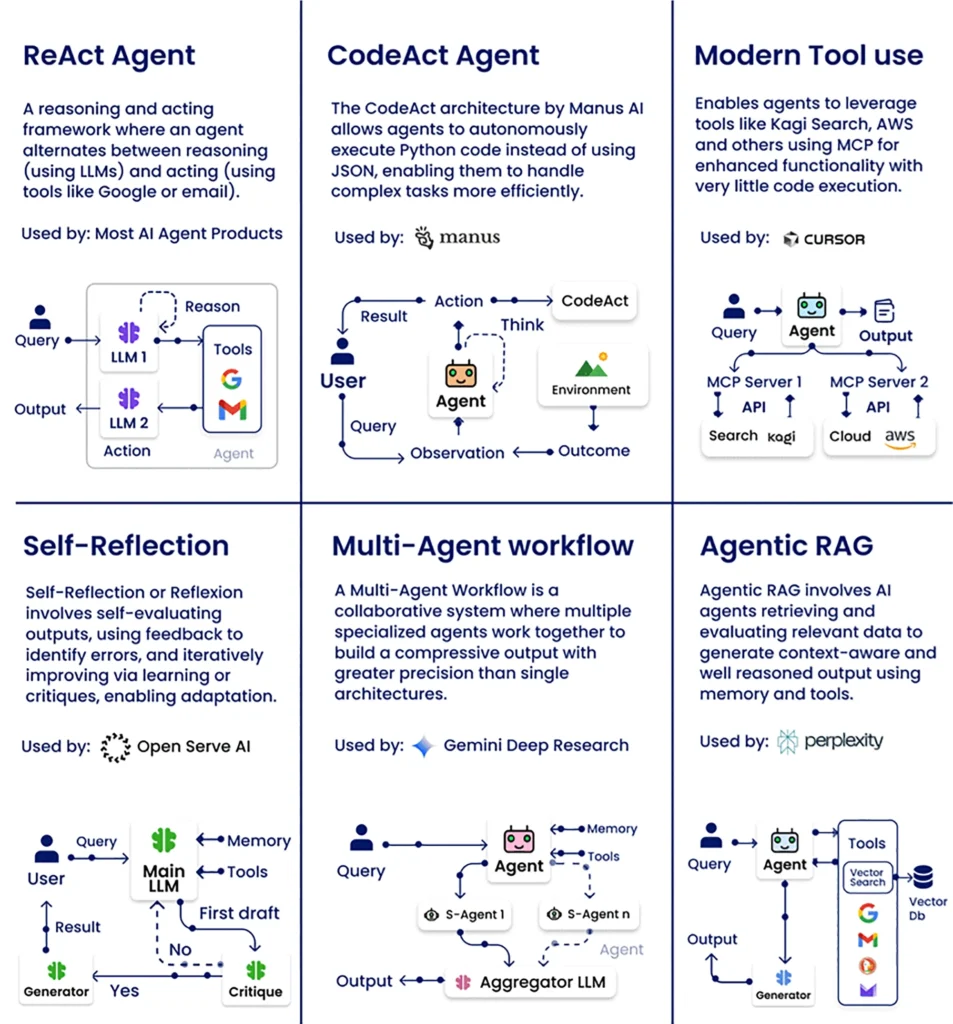

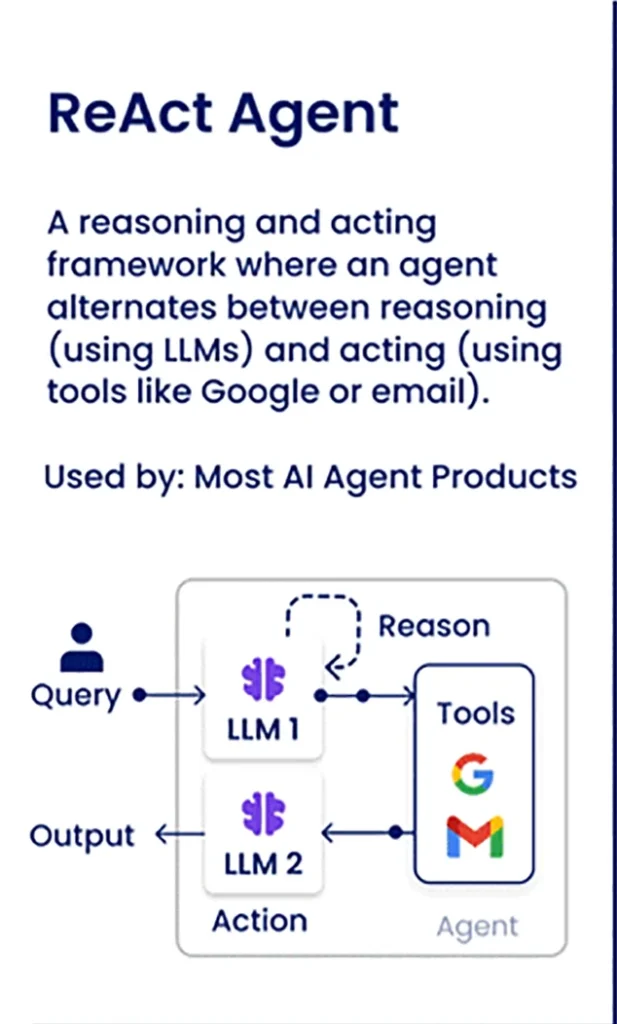

1. ReAct Agent

(REason + ACT)

Core Idea:

The ReAct pattern is a loop where the agent:

- Thinks about what to do (i.e., reasons),

- Acts (calls tools or APIs),

- Observes the outcome,

- Repeats until it can give a confident answer.

This creates a dynamic interaction loop instead of one-shot generation.

Workflow:

```text
Thought: I need to look up the current weather.
Action: Call Weather API
Observation: It's 15°C in London.
Thought: That's the info I need.
Answer: It's currently 15°C in London.
```
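The Thought → Action → Observation loop above can be sketched in Python (3.9+). This is a minimal sketch under stated assumptions: `scripted_llm` is a hypothetical stand-in for a real model call that simply replays the example trace, and `get_weather` is a hypothetical tool returning canned data instead of calling a real weather API.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical tool standing in for a real weather API.
def get_weather(city: str) -> str:
    fake_readings = {"London": "15°C"}
    return f"It's {fake_readings.get(city, 'an unknown temperature')} in {city}."

TOOLS: dict[str, Callable[[str], str]] = {"get_weather": get_weather}

@dataclass
class Step:
    thought: str
    action: Optional[str] = None   # tool to call, or None when answering
    action_input: str = ""
    answer: Optional[str] = None   # set only on the final step

def scripted_llm(question: str, history: list[str]) -> Step:
    """Hypothetical stand-in for a model call that would see the question plus history."""
    observations = [h for h in history if h.startswith("Observation: ")]
    if not observations:
        return Step(thought="I need to look up the current weather.",
                    action="get_weather", action_input="London")
    return Step(thought="That's the info I need.",
                answer=observations[-1].removeprefix("Observation: "))

def react(question: str, llm, tools, max_steps: int = 5):
    """Run Thought -> Action -> Observation cycles until the model answers or steps run out."""
    history: list[str] = []
    for _ in range(max_steps):
        step = llm(question, history)
        history.append(f"Thought: {step.thought}")
        if step.answer is not None:
            history.append(f"Answer: {step.answer}")
            return step.answer, history
        tool = tools.get(step.action)
        history.append(f"Action: {step.action}({step.action_input})")
        # Report unknown tools as observations so the model can recover.
        observation = tool(step.action_input) if tool else f"Unknown tool: {step.action}"
        history.append(f"Observation: {observation}")
    return None, history  # step budget exhausted without an answer

answer, trace = react("What's the weather in London?", scripted_llm, TOOLS)
```

The `max_steps` cap is a design choice: it bounds the latency and cost of many-step loops, and returning `None` makes "no answer" explicit rather than letting the agent loop forever.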

Pros:

- Mimics human step-by-step problem solving

- Reduces hallucination by checking results

- Very flexible for open-ended tasks

Cons:

- Can be slow due to many steps

- Requires robust observation parsing

Use Cases:

- Search-driven assistants (e.g., GPT + tools)

- Web automation

- Planning tasks with conditional decisions

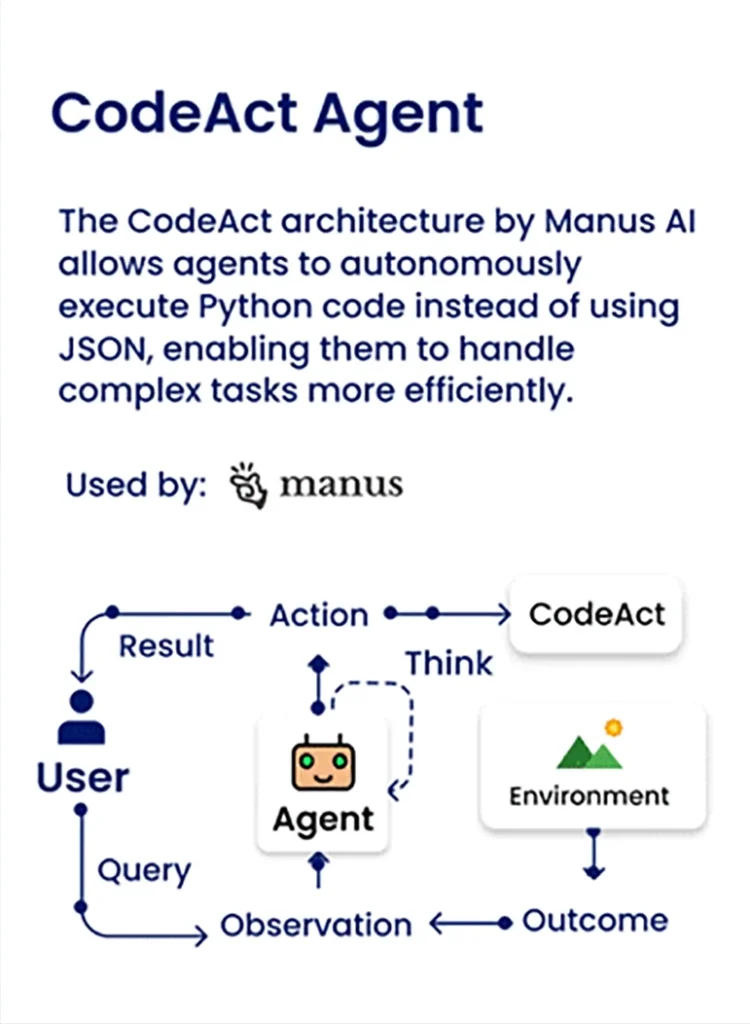

2. CodeAct Agent

(Code + Execute)

Core Idea:

The agent generates actual code (e.g., Python, SQL, Bash) and then executes it to get results. For calculations, data manipulation, and precise logic, this is typically more reliable than reasoning in natural language alone.

Workflow Example:

Input: “What’s the average of [12, 15, 22]?”

Agent writes:

```python
nums = [12, 15, 22]
average = sum(nums) / len(nums)
print(average)
```
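Running the generated code is the other half of the pattern. The sketch below (Python 3.9+) executes a snippet in a separate interpreter with a timeout and feeds any error back for a retry. `scripted_codegen` is a hypothetical stand-in for the model, hard-coded to make one mistake so the retry path runs. A subprocess with a timeout is not a security sandbox; it only illustrates the control flow.

```python
import subprocess
import sys
from typing import Callable, Optional

def run_generated_code(code: str, timeout_s: float = 5.0) -> tuple[bool, str]:
    """Execute model-written Python in a separate interpreter and capture its output.

    A child process with a timeout limits runaway code but is NOT a security
    sandbox; untrusted code needs real isolation (containers, VMs, etc.).
    """
    try:
        proc = subprocess.run(
            [sys.executable, "-I", "-c", code],  # -I: isolated mode (ignores PYTHON* env vars, user site dir)
            capture_output=True, text=True, timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return False, f"Timed out after {timeout_s} seconds"
    if proc.returncode != 0:
        return False, proc.stderr.strip()  # traceback text, fed back to the model
    return True, proc.stdout.strip()

def scripted_codegen(task: str, last_error: Optional[str]) -> str:
    """Hypothetical stand-in for an LLM: the first draft has a typo, the retry fixes it."""
    if last_error is None:
        return "nums = [12, 15, 22]\nprint(sum(nums) / len(num))"  # NameError: num
    return "nums = [12, 15, 22]\nprint(sum(nums) / len(nums))"

def code_act(task: str, codegen: Callable[[str, Optional[str]], str], max_attempts: int = 3) -> str:
    """Generate code, run it, and retry with the error message until it succeeds."""
    last_error: Optional[str] = None
    for _ in range(max_attempts):
        ok, output = run_generated_code(codegen(task, last_error))
        if ok:
            return output
        last_error = output
    raise RuntimeError(f"No working code after {max_attempts} attempts:\n{last_error}")

result = code_act("What's the average of [12, 15, 22]?", scripted_codegen)
```

Feeding the traceback back to the model, instead of failing on the first error, is what lets the agent catch and handle its own code-generation mistakes.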

Pros:

- Enables real computation and logic

- Highly expressive: you can do anything code can do

- Easily integrates with existing systems and APIs

Cons:

- Needs secure sandboxing to prevent malicious or unsafe code

- Errors in code generation must be caught and handled

Use Cases:

- Data analysis assistants

- Developer tools (e.g., GitHub Copilot, Code Interpreter)

- Scientific research agents

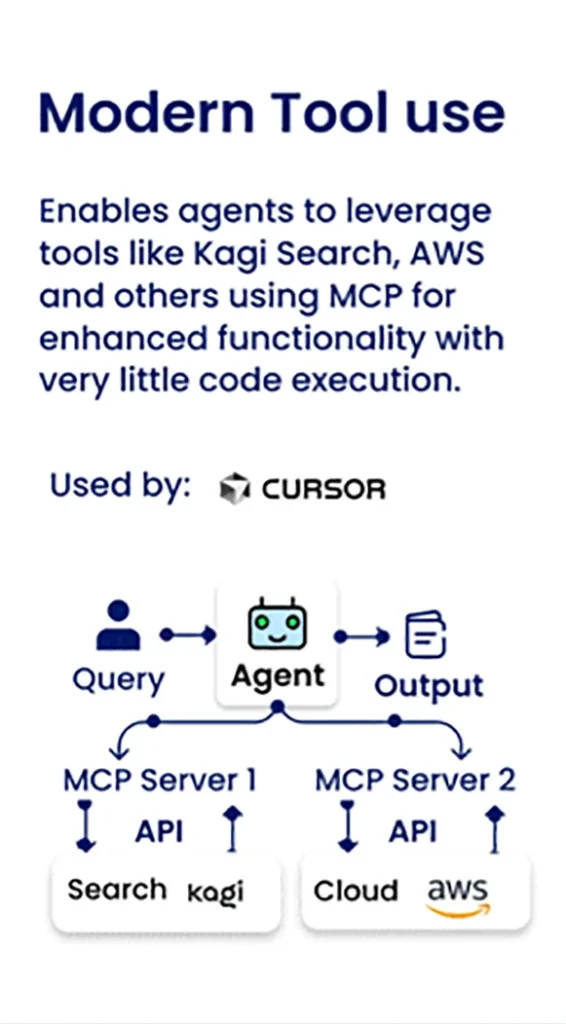

3. Modern Tool Use

(Smart Routing to APIs/Services)

Core Idea:

The agent doesn’t do the work itself — it delegates to external APIs and tools (like Google Search, Snowflake, Slack, Notion). The agent’s job is coordination.

Analogy:

Think of the agent as a smart middle layer: it decides which tool to use and sends and receives data in the format each tool expects.
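The routing step can be sketched as a registry plus a dispatcher. This assumes the model emits a JSON tool call of the hypothetical shape `{"tool": ..., "args": {...}}`; real providers each define their own function-calling format. `search_docs` and `send_message` are hypothetical stand-ins for integrations such as a search service or Slack.

```python
import json
from typing import Any

# Hypothetical tools standing in for real integrations (search, chat, etc.).
def search_docs(query: str) -> list[str]:
    index = {"refund policy": ["Refunds are accepted within 30 days."]}
    return index.get(query.lower(), [])

def send_message(channel: str, text: str) -> str:
    return f"posted to #{channel}: {text}"

# Registry: tool name -> callable plus the argument names it requires.
TOOL_REGISTRY: dict[str, dict[str, Any]] = {
    "search_docs": {"fn": search_docs, "params": {"query"}},
    "send_message": {"fn": send_message, "params": {"channel", "text"}},
}

def dispatch(tool_call_json: str) -> dict[str, Any]:
    """Route a model-emitted call such as {"tool": "search_docs", "args": {...}}."""
    try:
        call = json.loads(tool_call_json)
        spec = TOOL_REGISTRY[call["tool"]]
        args = call.get("args", {})
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        return {"ok": False, "error": f"malformed tool call: {exc!r}"}
    if not isinstance(args, dict) or set(args) != spec["params"]:
        return {"ok": False, "error": f"expected arguments {sorted(spec['params'])}"}
    return {"ok": True, "result": spec["fn"](**args)}
```

Returning errors as data rather than raising lets the agent see what went wrong and correct its next call, which is one way to make tool-output parsing robust.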

Pros:

- Highly scalable and efficient

- Leverages the strengths of each specialized tool

- Ideal for real-world, business-grade agents

Cons:

- Requires tool integration and maintenance

- Parsing tool outputs reliably is critical

Use Cases:

- AI dashboards (agent talks to data APIs)

- Enterprise chatbots

- CRM or ERP automation

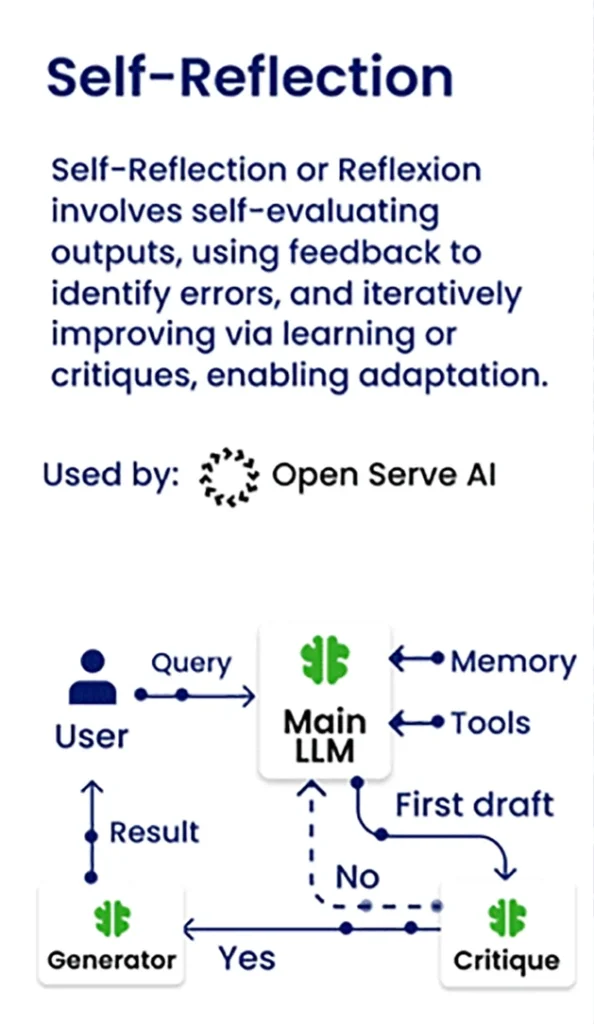

4. Self-Reflection

(Critique + Retry)

Core Idea:

The agent reviews its own output, looks for mistakes, critiques itself, and retries with improvements. This adds a feedback loop to generation.

Workflow:

```text
First Answer: “Einstein invented the lightbulb.”
Reflection: “That’s factually incorrect; the lightbulb is credited to Thomas Edison, not Einstein.”
Revised Answer: “Thomas Edison is credited with inventing the lightbulb.”
```
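The draft, critique, revise loop can be sketched with two callables. `writer` and `critic` are hypothetical stand-ins for a generation prompt and a critique prompt (or a separate critic agent); they return canned text that mirrors the example above.

```python
from typing import Callable, Optional

def reflect_and_revise(
    task: str,
    generate: Callable[[str, Optional[str]], str],
    critique: Callable[[str, str], Optional[str]],
    max_rounds: int = 3,
) -> tuple[str, list[str]]:
    """Draft, critique, and revise until the critic has no objections or rounds run out."""
    draft = generate(task, None)
    drafts = [draft]
    for _ in range(max_rounds):  # cap rounds to bound latency and cost
        feedback = critique(task, draft)
        if feedback is None:     # critic is satisfied
            break
        draft = generate(task, feedback)
        drafts.append(draft)
    return draft, drafts

# Hypothetical stand-ins for two LLM calls (a writer prompt and a critic prompt).
def writer(task: str, feedback: Optional[str]) -> str:
    if feedback is None:
        return "Einstein invented the lightbulb."
    return "Thomas Edison is credited with inventing the lightbulb."

def critic(task: str, draft: str) -> Optional[str]:
    if "Einstein" in draft:
        return "Factually incorrect: the lightbulb is credited to Edison, not Einstein."
    return None

final, drafts = reflect_and_revise("Who invented the lightbulb?", writer, critic)
```

Keeping every draft makes the correction trail inspectable, which is where the explainability benefit comes from; the round cap is the knob that trades quality against the slower performance noted for this pattern.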

Pros:

- Boosts factual correctness

- Reduces hallucinations

- Adds explainability (it shows how it caught errors)

Cons:

- Slower performance

- Needs high-quality critique prompts or critic agents

Use Cases:

- Content generation (blog, legal, policy)

- Coding agents

- Scientific and academic assistants

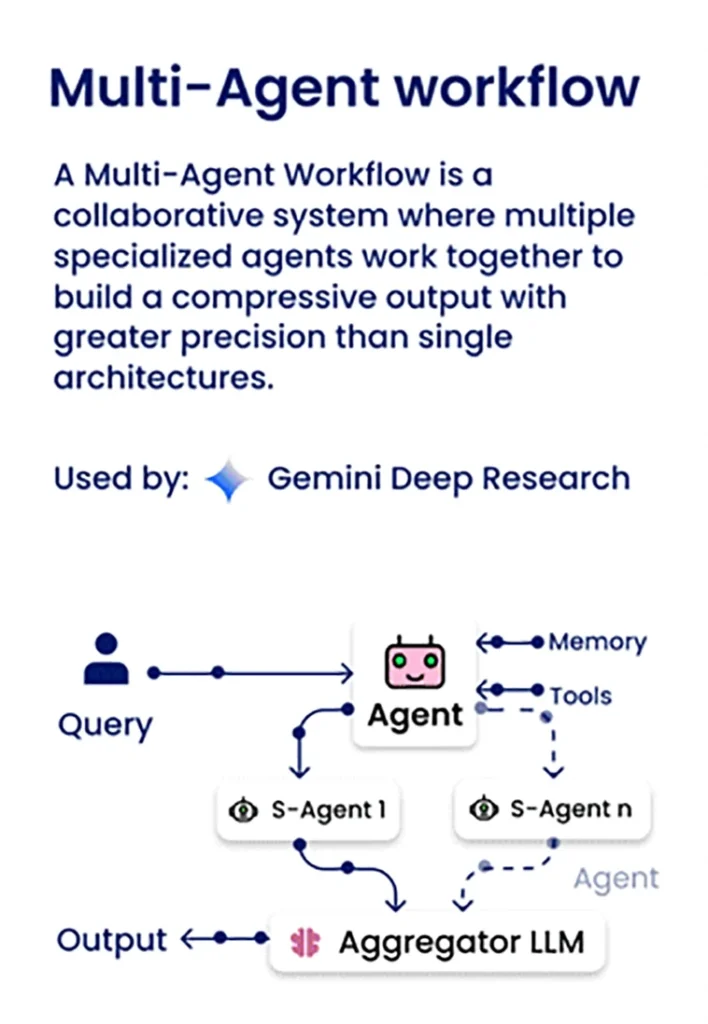

5. Multi-Agent Workflow

(Roles + Collaboration)

Core Idea:

Instead of one big general agent, you define specialized agents (Planner, Researcher, Writer, Critic, etc.) that work together.

Example:

- Planner: decides tasks

- Researcher: fetches data

- Writer: drafts report

- Reviewer: checks logic
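The roles above can be sketched as functions that communicate only through a shared message log. Every role here is a hypothetical stand-in returning canned text; in a real system each would wrap its own prompt and tools, and frameworks such as AutoGen or CrewAI offer richer orchestration than the fixed sequential hand-off assumed here.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Message:
    sender: str
    content: str

@dataclass
class MessageLog:
    """Shared channel the agents communicate through (simple message passing)."""
    messages: list[Message] = field(default_factory=list)

    def post(self, sender: str, content: str) -> None:
        self.messages.append(Message(sender, content))

    def latest_from(self, sender: str) -> str:
        return next(m.content for m in reversed(self.messages) if m.sender == sender)

# Hypothetical role agents; in a real system each would wrap its own LLM prompt and tools.
def planner(log: MessageLog, goal: str) -> None:
    log.post("planner", f"Plan: research '{goal}', draft a report, review it.")

def researcher(log: MessageLog, goal: str) -> None:
    log.post("researcher", f"Findings on {goal}: [finding 1], [finding 2]")

def writer(log: MessageLog, goal: str) -> None:
    findings = log.latest_from("researcher")
    log.post("writer", f"Report: {goal}. Sources: {findings}")

def reviewer(log: MessageLog, goal: str) -> None:
    draft = log.latest_from("writer")
    log.post("reviewer", "approved" if "Sources:" in draft else "revise: cite sources")

def run_team(goal: str, team: list[Callable[[MessageLog, str], None]]) -> MessageLog:
    log = MessageLog()
    for agent in team:  # fixed sequential hand-off; real orchestrators may loop or branch
        agent(log, goal)
    return log

log = run_team("EV adoption", [planner, researcher, writer, reviewer])
```

Because roles only read and write the log, any one of them can be swapped out or improved without touching the others, which is the modularity benefit listed for this pattern.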

Pros:

- Modular and composable

- Easier to scale or improve specific roles

- Resembles human team workflows

Cons:

- Requires communication protocols (message passing)

- Can become complex to orchestrate

Use Cases:

- Long-form document creation

- Workflow bots

- Research pipelines

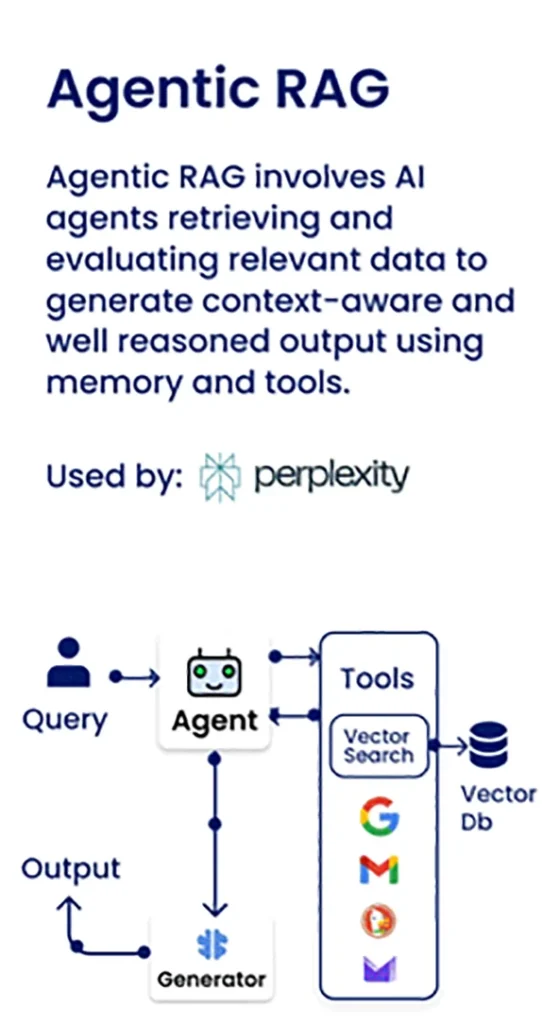

6. Agentic RAG

(Retrieval-Augmented Generation + Agent Behavior)

Core Idea:

Traditional RAG = Retrieve documents → Feed to LLM → Generate output.

Agentic RAG = Agent decides what to retrieve, how to use it, which tools to apply, and how to compose final output.

Steps:

- Search for documents

- Choose relevant ones

- Read, analyze, combine

- Answer based on context
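The four steps above can be sketched as a small Python program. Assumptions: `CORPUS` is a hypothetical in-memory document set, `search` is a toy word-overlap retriever standing in for a real vector or keyword index, and `plan_queries` is a hypothetical stand-in for an LLM deciding what to search for (here it just splits the question on "and").

```python
import re

# Hypothetical document store.
CORPUS = {
    "hr-01": "Employees may work remotely up to three days per week.",
    "hr-02": "The remote work stipend is 50 EUR per month.",
    "fac-07": "The cafeteria opens at 8am on weekdays.",
}

def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def search(query: str, k: int = 2) -> list[tuple[str, int]]:
    """Toy lexical search: score each document by how many query words it shares."""
    q = tokens(query)
    scored = [(doc_id, len(q & tokens(text))) for doc_id, text in CORPUS.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]

def plan_queries(question: str) -> list[str]:
    # Hypothetical stand-in for an LLM step that decomposes the question.
    return [part.strip() for part in question.split(" and ") if part.strip()]

def agentic_rag(question: str, min_score: int = 2) -> str:
    selected: dict[str, str] = {}
    for sub_query in plan_queries(question):      # decide what to search for
        for doc_id, score in search(sub_query):   # search for documents
            if score >= min_score:                # choose relevant ones
                selected[doc_id] = CORPUS[doc_id]
    if not selected:
        return "No sufficiently relevant documents found."
    # Combine and answer from context; an LLM would write the final prose here.
    return " ".join(f"{text} [{doc_id}]" for doc_id, text in selected.items())
```

A fuller agentic version would also loop: if too little relevant context is found, the agent would reformulate the query and search again before answering.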

Pros:

- Combines fresh data with reasoning

- Powers real-time apps (news, research, legal)

- Great for factual tasks

Cons:

- Retrieval quality matters (garbage in = garbage out)

- Needs system design to structure the search pipeline

Use Cases:

- Perplexity.ai, ChatGPT Browsing

- Real-time news or academic assistants

- Legal research agents

Summary Table

| Pattern | Key Function | Best For |
|---|---|---|
| ReAct | Think → Act → Observe Loop | Search tasks, planning agents |
| CodeAct | Generate & run code | Data, dev tools, computation-heavy tasks |
| Modern Tool Use | Route to APIs/tools | Enterprise assistants, data interfaces |
| Self-Reflection | Critique & revise | Factual correctness, coding, writing |
| Multi-Agent Workflow | Role-based collaboration | Reports, multi-step planning |
| Agentic RAG | Retrieve → Analyze → Answer | Real-time Q&A, research, dynamic facts |

FAQ

What is the difference between an AI Agent and a standard LLM prompt?

A standard LLM prompt is a single, static input that produces a one-time response. An AI Agent, by contrast, can think, act, observe, reflect, and revise — creating a dynamic, iterative process. Agents often use tools, write code, retrieve documents, or coordinate multiple steps, going far beyond simple prompt-response behavior.

Do these agent patterns replace prompts entirely?

Not at all. Prompts are still essential — but they become components within a larger system. Each pattern leverages prompt engineering in specific ways, especially in decision-making, tool selection, or reflective reasoning.

Can I combine multiple patterns in a single agent?

Yes, and in practice you usually should. These patterns are modular, not mutually exclusive. For example, a Multi-Agent Workflow may include a CodeAct agent that uses Self-Reflection. Many practical agents combine several patterns rather than relying on just one.

What tools or frameworks help implement these patterns?

Popular frameworks that support agentic design patterns include:

- LangChain (Python/JS): Tool routing, RAG, planning

- AutoGen (Microsoft): Multi-agent workflows

- CrewAI: Role-based agent coordination

- OpenAgents: For researchers/developers

- Haystack, LlamaIndex: RAG-centric architectures

Is ReAct the same as Chain of Thought (CoT)?

No. Chain of Thought (CoT) refers to internal reasoning only (“think step-by-step”). ReAct combines CoT with external actions (like calling tools or APIs), creating a reason–act–observe loop.

How is Agentic RAG different from traditional RAG?

Traditional RAG simply retrieves documents and sends them to an LLM for output. Agentic RAG adds intentionality: the agent decides what to retrieve, how to analyze, what tools to apply, and whether more steps are needed. It’s an interactive, decision-driven RAG system.

What are the biggest challenges in building agentic systems?

- Tool integration complexity

- Error handling (especially in CodeAct and Self-Reflection)

- Latency/performance trade-offs

- Agent coordination (in Multi-Agent workflows)

- Security and sandboxing for code execution

How do I make agents safe and reliable?

- Use sandboxed environments for code execution.

- Validate tool outputs and use fallback logic.

- Incorporate self-reflection or reviewer agents.

- Log all decisions for transparency and debugging.
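The second and fourth points can be combined in one wrapper. This is a sketch using only the standard `logging` module; `live_weather` (which always times out), `cached_weather`, and the `plausible_weather` check are hypothetical tools for illustration.

```python
import logging
from typing import Any, Callable

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("agent")

def guarded_call(name: str, primary: Callable[..., Any], fallback: Callable[..., Any],
                 validate: Callable[[Any], bool], *args: Any) -> Any:
    """Call a tool, validate its output, fall back on failure, and log every decision."""
    try:
        result = primary(*args)
        if validate(result):
            log.info("tool=%s status=ok", name)
            return result
        log.warning("tool=%s status=invalid_output value=%r", name, result)
    except Exception as exc:  # any tool (or validator) failure triggers the fallback path
        log.warning("tool=%s status=error error=%r", name, exc)
    log.info("tool=%s status=fallback", name)
    return fallback(*args)  # note: the fallback's output is not re-validated here

# Hypothetical tools for illustration.
def live_weather(city: str) -> dict:
    raise TimeoutError("weather service timed out")

def cached_weather(city: str) -> dict:
    return {"city": city, "temp_c": 15}

def plausible_weather(result: Any) -> bool:
    return isinstance(result, dict) and -90 <= result.get("temp_c", 999) <= 60
```

Structured `key=value` log lines are easy to grep and aggregate later, which helps with the debugging and transparency goal.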

Do these patterns apply to non-technical industries?

Yes! These design patterns are already being applied in:

- Finance (report generation, forecasting)

- Legal (document review, case search)

- Healthcare (decision support, triage bots)

- Education (intelligent tutoring systems)

- Sales/CRM (assistant dashboards, summarization)

Where should I start if I’m new to building agents?

Start small:

- Try LangChain + OpenAI API to build a ReAct or Tool-Use agent.

- Use Code Interpreter (ChatGPT Plus) to experiment with CodeAct patterns.

- Read Andrew Ng’s Agentic Design series and GitHub examples.

Then iterate — just like an agent would.

Find us

- Balian’s Blogs

- LinkedIn: Shant Khayalian

- Facebook: Balian’s

- X: Balian’s

- Web: Balian’s

- YouTube: Balian’s

#AIAgents #AgenticAI #LLMArchitecture #AIWorkflows #AIEngineering #PromptEngineering #AutonomousAgents #DesignPatterns #AgentDesign #CognitiveArchitecture #HumanAIInteraction #AIDevelopment #AIProductDesign #AIIntegration #AIInnovation #FutureOfAI #AIFrameworks