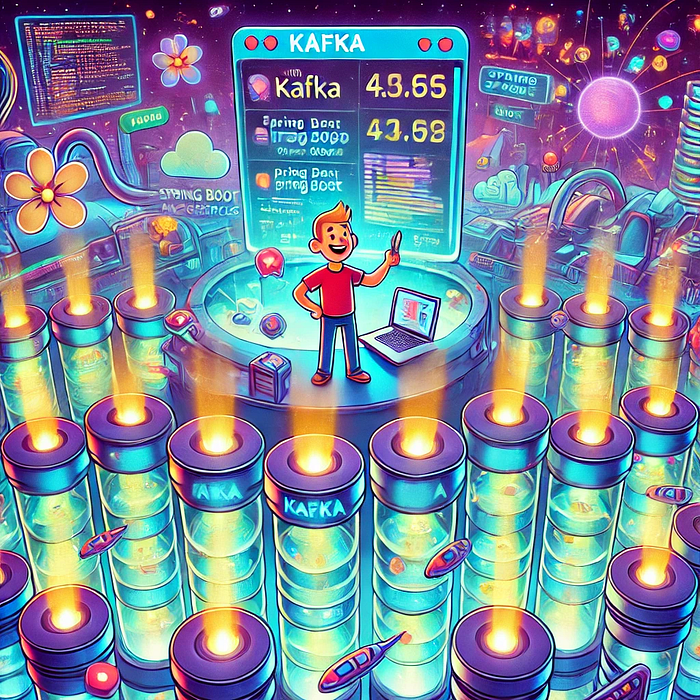

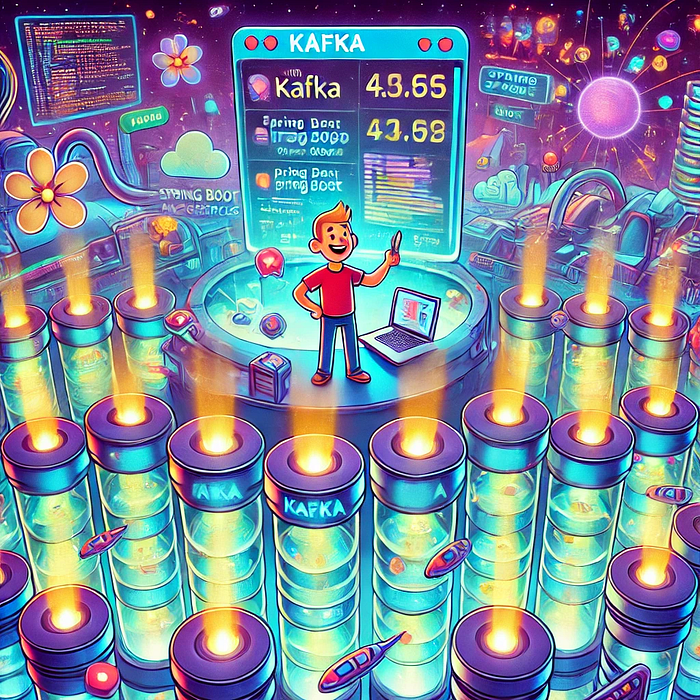

Handling 1 Million Messages per Second with Kafka & Spring Boot

Views: 0

Mission: Real-Time Throughput at Scale

Bob, our favorite builder, now works at a fast-growing fintech company. His task? Build a real-time transaction processor that can handle 1 million messages per second.

This isn’t your typical “Hello World” microservice. This is the real deal — Kafka + Spring Boot + production-grade tuning.

What You’ll Learn

✔ Kafka architecture that supports million-scale throughput

✔ Spring Boot Kafka producer/consumer tuning

✔ Partitioning, batching, compression, and parallelism

✔ Real-world deployment and performance benchmarks

1 Understanding the Challenge

Bob’s system needs to:

- Process 1 million events per second

- Guarantee low latency (<10ms)

- Be resilient to failures

- Scale horizontally without falling over

He chooses Apache Kafka for its distributed log-based architecture and high-throughput streaming capability.

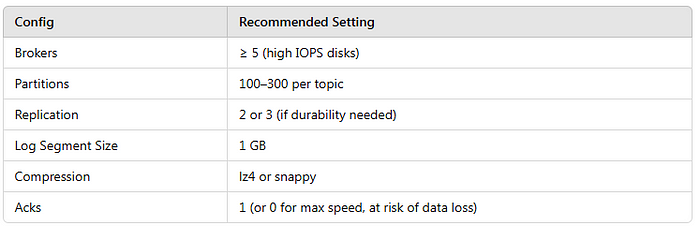

2 Kafka Setup: Scaling with Partitions & Brokers

Bob starts with Kafka cluster design.

Real-Life Analogy:

Think of each Kafka partition as a checkout counter at a supermarket. The more counters, the more customers (messages) you can serve in parallel.

Kafka Tuning for 1M/s

3 Spring Boot Kafka Producer Setup

Bob configures his Kafka producers to optimize performance.

application.yml

spring:

kafka:

producer:

batch-size: 32768

buffer-memory: 67108864

compression-type: lz4

acks: 1

linger-ms: 10

retries: 1TransactionEventProducer.java

@Autowired

private KafkaTemplate<String, String> kafkaTemplate;

public void send(String topic, String message) {

kafkaTemplate.send(topic, message);

}Tip: Use ProducerRecord if you want to control partitioning manually.

4 Spring Boot Kafka Consumer Setup

Bob now sets up high-speed consumers.

application.yml

spring:

kafka:

consumer:

group-id: high-speed-consumers

max-poll-records: 1000

fetch-min-size: 50000

fetch-max-wait: 500

enable-auto-commit: falseTransactionEventListener.java

@KafkaListener(topics = "transactions", concurrency = "10")

public void consume(String message) {

// High-speed processing logic

}✔ Set concurrency = number of partitions / cores to scale thread processing.

5 Batching, Compression, and Parallelism

To push the system toward 1M messages/sec, Bob applies:

- Batch sending (linger.ms + batch.size)

- Compression (lz4) reduces network IO

- Consumer concurrency to leverage all CPU cores

- Message keying for even partition distribution

Use Avro or Protobuf instead of JSON to save 40–70% in message size.

6 Benchmarks: Real-World Results

Bob deploys his system in Kubernetes using:

- Kafka (3 brokers, 100 partitions)

- 3 Producer pods, 6 Consumer pods

- 8 vCPU, 16 GB RAM per pod

Results:

- ~1.05 million messages/sec sustained

- < 10 ms average latency

- 99.99% delivery success with retries + acks=1

7 Real-World Tools & Monitoring

Bob integrates:

- Prometheus + Grafana: For Kafka and Spring metrics

- Kafka Manager / Kowl: To inspect topic health

- Loki + Fluentd: For log aggregation

✔ Track:

- Lag per partition

- Consumer group offsets

- Producer throughput

- JVM memory and GC

Find us

linkedin Shant Khayalian

Facebook Balian’s

X-platform Balian’s

web Balian’s

Youtube Balian’s

#kafka #springboot #highthroughput #realtimesystems #messagingarchitecture #scalablesystems #microservices #javaperformance #eventdrivenarchitecture