OpenAI Spring Boot Integration: Boost Your Java AI Projects (Ultimate Guide 2024)

Introduction to OpenAI Spring Boot Integration

Welcome to the new era of Java development!

If you’ve been wondering how to bring OpenAI into your Spring Boot projects, you’re in the right place. Today’s smart applications demand not just good code but real intelligence, and OpenAI’s models can supply it.

By mastering OpenAI Spring Boot Integration, developers can unleash powerful AI features inside scalable, production-ready Java apps, taking their projects to the next level. Whether it’s building smarter customer support, summarizing documents, or auto-generating content, this guide will walk you through every step.

Why OpenAI + Java is a Game Changer

It used to be that Python ruled the AI world. TensorFlow, PyTorch, Hugging Face — all the good stuff seemed to belong to Pythonistas. But OpenAI changed the game.

Why? Because OpenAI’s RESTful APIs don’t care which language you use — as long as you can send JSON and read responses. And Java, with Spring Boot, is more than capable of doing just that.
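To make the "send JSON, read a response" point concrete, here is a minimal sketch that builds the same kind of request using only the JDK's java.net.http classes (Java 11+). The class name RawOpenAiRequest and its helper methods are hypothetical, and the request is only constructed here, not sent.

```java
import java.net.URI;
import java.net.http.HttpRequest;

/** Hypothetical helper: builds (but does not send) a Chat Completions request. */
final class RawOpenAiRequest {

    // Escapes the characters that would otherwise break a JSON string literal.
    static String jsonEscape(String s) {
        StringBuilder out = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"':  out.append("\\\""); break;
                case '\\': out.append("\\\\"); break;
                case '\n': out.append("\\n");  break;
                case '\r': out.append("\\r");  break;
                case '\t': out.append("\\t");  break;
                default:
                    if (c < 0x20) out.append(String.format("\\u%04x", (int) c));
                    else out.append(c);
            }
        }
        return out.toString();
    }

    // Hand-built JSON body with a single user message.
    static String body(String model, String prompt) {
        return "{\"model\":\"" + jsonEscape(model) + "\","
             + "\"messages\":[{\"role\":\"user\",\"content\":\"" + jsonEscape(prompt) + "\"}]}";
    }

    static HttpRequest build(String apiKey, String model, String prompt) {
        return HttpRequest.newBuilder(URI.create("https://api.openai.com/v1/chat/completions"))
                .header("Content-Type", "application/json")
                .header("Authorization", "Bearer " + apiKey)
                .POST(HttpRequest.BodyPublishers.ofString(body(model, prompt)))
                .build();
    }
}
```

In a real project a JSON library such as Jackson would normally build the body; the hand-rolled escaping is only there to keep the sketch dependency-free.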

This shift levels the playing field. Java developers can now:

- Build intelligent chatbots

- Summarize documents

- Generate content (emails, blogs, FAQs)

- Automate support replies

- Recommend actions based on inputs

And thanks to Spring Boot’s structure, you get scalable, production-ready AI features from day one.

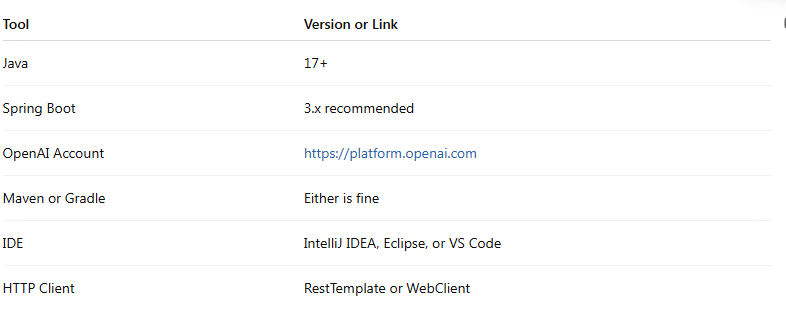

Spring Boot + OpenAI: What You’ll Need

Before writing a single line of code, make sure you have a JDK, a build tool such as Maven or Gradle, and an IDE installed.

Also, grab your OpenAI API key; it’s required to authenticate your requests.

Creating Your Spring Boot Project from Scratch

Start with Spring Initializr:

- Group: com.example

- Artifact: aiassistant

- Dependencies: Spring Web, Spring Boot DevTools, Lombok (optional)

Download the project, unzip it, and open it in your favorite IDE.

Next, set up the application.yml or application.properties to store your API key securely (we’ll cover this later).

Setting Up Secure OpenAI Access

Never hardcode your API key.

Add this to your application.yml:

    openai:
      api:
        key: ${OPENAI_API_KEY}

Then create a @ConfigurationProperties-enabled class:

    @Configuration
    @ConfigurationProperties(prefix = "openai.api")
    @Data
    public class OpenAiConfig {
        private String key;
    }

Inject this config into your service layer later on. Don’t forget to set your environment variable (OPENAI_API_KEY) locally or in your CI/CD.
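If the environment variable is missing, the failure tends to surface only on the first API call. A small fail-fast check can catch that earlier. The sketch below is plain Java with a hypothetical ApiKeyResolver class; it takes the environment as a Map so it is easy to exercise without touching real variables.

```java
import java.util.Map;

/** Hypothetical helper: validates the API key once, at startup. */
final class ApiKeyResolver {

    // Returns the trimmed key or fails loudly if it is absent or blank.
    static String resolve(Map<String, String> env) {
        String key = env.get("OPENAI_API_KEY");
        if (key == null || key.isBlank()) {
            throw new IllegalStateException("OPENAI_API_KEY is not set");
        }
        return key.trim();
    }

    // Masks the key for log output so only the last four characters are visible.
    static String mask(String key) {
        if (key.length() <= 4) {
            return "****";
        }
        return "****" + key.substring(key.length() - 4);
    }
}
```

A typical call would be `ApiKeyResolver.resolve(System.getenv())`, logging only `mask(...)` of the result.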

Configuring OpenAI API with RestTemplate

RestTemplate works like a charm for sending POST requests to OpenAI:

    @Bean
    public RestTemplate restTemplate(RestTemplateBuilder builder) {
        return builder.build();
    }

You’ll use this to send JSON payloads and receive structured responses.

Building the OpenAI Service Layer

Here’s a basic service class:

    @Service
    @RequiredArgsConstructor
    public class OpenAiService {

        private final OpenAiConfig config;
        private final RestTemplate restTemplate;

        private static final String OPENAI_URL = "https://api.openai.com/v1/chat/completions";

        public String getResponse(String userPrompt) {
            HttpHeaders headers = new HttpHeaders();
            headers.setContentType(MediaType.APPLICATION_JSON);
            headers.setBearerAuth(config.getKey());

            Map<String, Object> message = Map.of("role", "user", "content", userPrompt);
            Map<String, Object> request = Map.of(
                "model", "gpt-3.5-turbo",
                "messages", List.of(message)
            );

            HttpEntity<Map<String, Object>> entity = new HttpEntity<>(request, headers);
            ResponseEntity<Map> response = restTemplate.postForEntity(OPENAI_URL, entity, Map.class);

            List<Map<String, Object>> choices = (List<Map<String, Object>>) response.getBody().get("choices");
            Map<String, Object> messageData = (Map<String, Object>) choices.get(0).get("message");
            return (String) messageData.get("content");
        }
    }

Boom! This gives you a working backend AI bridge.
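The unchecked casts in the service will throw if the body is null or the choices list is empty. As a hedged alternative, the sketch below walks the same choices → message → content path defensively and returns an Optional. ChatResponseReader is a hypothetical name; the map shape is the one the service above already assumes.

```java
import java.util.List;
import java.util.Map;
import java.util.Optional;

/** Hypothetical helper: reads choices[0].message.content without unchecked casts. */
final class ChatResponseReader {

    static Optional<String> firstContent(Map<?, ?> body) {
        if (body == null) {
            return Optional.empty();
        }
        Object choices = body.get("choices");
        if (!(choices instanceof List) || ((List<?>) choices).isEmpty()) {
            return Optional.empty();
        }
        Object first = ((List<?>) choices).get(0);
        if (!(first instanceof Map)) {
            return Optional.empty();
        }
        Object message = ((Map<?, ?>) first).get("message");
        if (!(message instanceof Map)) {
            return Optional.empty();
        }
        Object content = ((Map<?, ?>) message).get("content");
        return content instanceof String ? Optional.of((String) content) : Optional.empty();
    }
}
```

The service could then return `ChatResponseReader.firstContent(response.getBody()).orElse("")` or throw a domain-specific exception, depending on how strict you want to be.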

Understanding OpenAI’s API Payload Structure

Interacting with OpenAI’s Chat API isn’t just a game of sending strings and waiting for magic. To get the most out of the model — especially in a structured environment like Spring Boot — you need to understand how the payload works.

Here’s a typical payload structure:

    {
      "model": "gpt-3.5-turbo",
      "messages": [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "How do I deploy a Spring Boot app?"}
      ]
    }

Let’s break that down in Java terms.

- model: This specifies which OpenAI model to use. At the time of writing, gpt-3.5-turbo is a cost-efficient choice.

- messages: This is a list of role-based conversation turns.

  - system: Sets the behavior of the AI.

  - user: The actual question or command.

  - assistant: Can be used to feed previous AI responses (optional, for multi-turn memory).

In your Spring Boot app, define a POJO to represent this:

    @Data
    @AllArgsConstructor
    @NoArgsConstructor
    public class Message {
        private String role;
        private String content;
    }

And for the full request:

    @Data
    @AllArgsConstructor
    @NoArgsConstructor
    public class ChatRequest {
        private String model;
        private List<Message> messages;
    }

This structure allows you to craft rich, contextual requests. Want a sarcastic AI assistant? Use a system message like:

    new Message("system", "Respond in a sarcastic tone.")

Your backend becomes a playground of personalities and use cases.
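The assistant role mentioned above is what gives a conversation memory: each request resends the earlier turns. Here is a minimal sketch of that idea, assuming a hypothetical Conversation class that keeps the system prompt fixed and retains only the most recent turns. It uses plain maps rather than the Message POJO so it runs without Lombok.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Hypothetical helper: keeps a bounded window of user/assistant turns. */
final class Conversation {

    private final String systemPrompt;
    private final int maxTurns; // number of past messages to keep (illustrative)
    private final List<Map<String, String>> turns = new ArrayList<>();

    Conversation(String systemPrompt, int maxTurns) {
        this.systemPrompt = systemPrompt;
        this.maxTurns = maxTurns;
    }

    void addUser(String text) {
        add("user", text);
    }

    void addAssistant(String text) {
        add("assistant", text);
    }

    private void add(String role, String content) {
        turns.add(Map.of("role", role, "content", content));
        // Drop the oldest turns once the window is full.
        while (turns.size() > maxTurns) {
            turns.remove(0);
        }
    }

    // The system message always comes first, followed by the retained turns.
    List<Map<String, String>> toMessages() {
        List<Map<String, String>> messages = new ArrayList<>();
        messages.add(Map.of("role", "system", "content", systemPrompt));
        messages.addAll(turns);
        return messages;
    }
}
```

The list returned by `toMessages()` has the same shape as the "messages" array in the payload, so it can be dropped into the request map directly.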

Creating a Chat Controller Endpoint

To expose the AI magic to the front end (or Postman), you’ll want to create a REST controller:

    @RestController
    @RequiredArgsConstructor
    @RequestMapping("/api/chat")
    public class ChatController {

        private final OpenAiService openAiService;

        @PostMapping
        public ResponseEntity<String> chat(@RequestBody Map<String, String> body) {
            String prompt = body.get("prompt");
            String response = openAiService.getResponse(prompt);
            return ResponseEntity.ok(response);
        }
    }

A simple POST /api/chat with a JSON body:

    {
      "prompt": "Explain Java's Stream API"
    }

Returns:

“Java’s Stream API allows you to process collections in a functional style…”

Clean. Clear. And ready for production.
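One gap worth closing before production: `body.get("prompt")` returns null when the key is missing, and nothing limits how long a prompt can be. The sketch below shows one way to validate the input first. PromptRequest and its 4000-character limit are hypothetical choices made for illustration.

```java
import java.util.Map;

/** Hypothetical helper: validates the incoming request body before calling OpenAI. */
final class PromptRequest {

    static final int MAX_PROMPT_CHARS = 4000; // arbitrary illustrative limit

    static String extractPrompt(Map<String, String> body) {
        String prompt = body == null ? null : body.get("prompt");
        if (prompt == null || prompt.isBlank()) {
            throw new IllegalArgumentException("prompt is required");
        }
        String trimmed = prompt.trim();
        if (trimmed.length() > MAX_PROMPT_CHARS) {
            throw new IllegalArgumentException("prompt is too long");
        }
        return trimmed;
    }
}
```

In the controller, the IllegalArgumentException could be mapped to a 400 Bad Request response so clients get a clear signal instead of a server error.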

Streaming Responses from OpenAI

So far, everything you’ve seen involves waiting for the full response. But OpenAI also supports streaming — where tokens are sent as they’re generated.

To do this in Java, you’ll need a client that can read Server-Sent Events (SSE), such as Spring’s WebClient, and you’ll need to set "stream": true in the request body. That takes more plumbing than a plain RestTemplate call, so a simpler option is to keep the backend on normal requests and simulate streaming toward the client.

You can, however, mimic streaming with chunked responses in Spring:

    @GetMapping("/stream")
    public ResponseBodyEmitter streamChat(@RequestParam String prompt) {
        ResponseBodyEmitter emitter = new ResponseBodyEmitter();

        // Run off the request thread so the emitter can be returned immediately
        new Thread(() -> {
            try {
                String response = openAiService.getResponse(prompt);
                for (char c : response.toCharArray()) {
                    // Send Strings so each chunk is written as plain text
                    emitter.send(String.valueOf(c));
                    Thread.sleep(20); // simulate delay
                }
                emitter.complete();
            } catch (Exception e) {
                emitter.completeWithError(e);
            }
        }).start();

        return emitter;
    }

From a user’s perspective, the experience feels real-time, even if you’re not using true OpenAI streaming under the hood.
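For readers who do want true streaming later, it helps to know what the wire format looks like. With streaming enabled, the response arrives as Server-Sent Events: lines starting with `data:` carry JSON chunks, and a final `data: [DONE]` marks the end. The sketch below covers only that framing step. SseDataLines is a hypothetical name, and parsing each JSON chunk is left to a library such as Jackson.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: extracts the data payloads from a list of SSE lines. */
final class SseDataLines {

    static List<String> payloads(List<String> lines) {
        List<String> out = new ArrayList<>();
        for (String line : lines) {
            if (!line.startsWith("data:")) {
                continue; // skip blank separator lines and ":" comment lines
            }
            String data = line.substring("data:".length()).trim();
            if (data.equals("[DONE]")) {
                break; // end-of-stream sentinel
            }
            if (!data.isEmpty()) {
                out.add(data);
            }
        }
        return out;
    }
}
```

Each returned payload could then be parsed and forwarded to the browser through the same ResponseBodyEmitter used above.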

Customizing System Prompts for Better Results

System prompts are your secret weapon.

Think of them as the AI’s “personality setup.” Want it to act like a recruiter? A pirate? A product manager? You got it.

Here’s how you inject that into your Java backend:

    List<Message> messages = List.of(
        new Message("system", "You are an expert Java tutor."),
        new Message("user", "Explain polymorphism.")
    );

Or dynamically:

    String persona = "Respond like Yoda, the Jedi master.";

    List<Message> messages = List.of(
        new Message("system", persona),
        new Message("user", prompt)
    );

Suddenly, your app isn’t just smart; it’s personified.

Advanced Prompt Engineering in Java

The secret to good AI is good prompts.

In Java, you can programmatically build prompts based on user inputs, context, or goals. For example:

    String dynamicPrompt = String.format(
        "Explain %s in simple terms, using examples.",
        topicFromUser
    );

You can also pre-load system messages based on user roles:

    if (user.isAdmin()) {
        systemMessage = "Give technical-level answers.";
    } else {
        systemMessage = "Explain concepts simply for non-tech users.";
    }

This is prompt engineering with conditions, and it’s exactly what modern AI applications need.
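The two snippets above can be combined into one small factory. This is a sketch under the assumption that the caller already knows whether the user is an admin; PromptFactory is a hypothetical name, and the messages are plain maps so the example runs without the Message POJO.

```java
import java.util.List;
import java.util.Map;

/** Hypothetical helper: picks a system message by role and formats the user prompt. */
final class PromptFactory {

    static String systemMessageFor(boolean admin) {
        return admin
                ? "Give technical-level answers."
                : "Explain concepts simply for non-tech users.";
    }

    static List<Map<String, String>> messagesFor(boolean admin, String topic) {
        String userPrompt = String.format(
                "Explain %s in simple terms, using examples.", topic.trim());
        return List.of(
                Map.of("role", "system", "content", systemMessageFor(admin)),
                Map.of("role", "user", "content", userPrompt));
    }
}
```

Keeping prompt construction in one place like this makes the wording easy to unit test and to change without touching the service layer.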

Error Handling and Resilience

Every robust API integration must be resilient, and the OpenAI API is no exception. Timeouts, invalid payloads, or rate limits can easily derail your application if not handled gracefully.

Here’s how to strengthen your integration:

Timeout Settings

Configure your RestTemplate to include timeouts:

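    @Bean
    public RestTemplate restTemplate() {
        HttpComponentsClientHttpRequestFactory factory = new HttpComponentsClientHttpRequestFactory();
        factory.setConnectTimeout(5000);  // milliseconds
        factory.setReadTimeout(10000);    // milliseconds
        return new RestTemplate(factory);
    }

Use this in place of the earlier restTemplate bean rather than alongside it. HttpComponentsClientHttpRequestFactory also needs Apache HttpClient on the classpath.

Graceful Fallbacks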

Wrap your OpenAI call in a try-catch block with a helpful default message:

    try {
        return openAiService.getResponse(prompt);
    } catch (RestClientException e) {
        // Covers 4xx and 5xx responses as well as timeouts and connection failures
        log.error("OpenAI API failed: {}", e.getMessage());
        return "Sorry, I'm having trouble accessing the AI at the moment. Try again later!";
    }

Rate Limiting

OpenAI uses per-minute rate limits based on your account tier. Always check for 429 errors and respect any retry headers:

    if (e.getStatusCode() == HttpStatus.TOO_MANY_REQUESTS) {
        // Note: a fixed sleep blocks the current thread for the whole minute
        Thread.sleep(60000); // back off for 1 min
    }

Resilience isn’t just a nice-to-have; it’s a core part of the developer experience and system trust.
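A fixed one-minute sleep is the bluntest form of backoff. A gentler option is to honor a Retry-After header when the response carries one and otherwise back off exponentially. The sketch below only computes the delay; RetryDelay and its constants are hypothetical, and the retry loop itself is left to the caller or to a library.

```java
/** Hypothetical helper: decides how long to wait before retrying a 429. */
final class RetryDelay {

    static final long BASE_MILLIS = 1_000;  // first retry waits about one second
    static final long MAX_MILLIS = 60_000;  // never wait longer than a minute

    /**
     * @param attempt          1-based retry attempt number
     * @param retryAfterHeader value of the Retry-After header in seconds, or null
     */
    static long millisFor(int attempt, String retryAfterHeader) {
        if (retryAfterHeader != null) {
            try {
                long seconds = Long.parseLong(retryAfterHeader.trim());
                if (seconds >= 0) {
                    return Math.min(seconds * 1000, MAX_MILLIS);
                }
            } catch (NumberFormatException ignored) {
                // The header may be an HTTP date; fall through to exponential backoff.
            }
        }
        // Doubles each attempt: 1s, 2s, 4s, ... capped at MAX_MILLIS.
        int shift = Math.min(Math.max(attempt - 1, 0), 16);
        return Math.min(BASE_MILLIS << shift, MAX_MILLIS);
    }
}
```

With RestTemplate, the header value could be read from `e.getResponseHeaders()` on the caught exception. Adding a little random jitter to the result is a common refinement when many clients retry at once.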

Monitoring & Observability with Spring Boot Actuator

When your app becomes smarter, it also becomes more important to monitor. Enter Spring Boot Actuator.

Add it via Maven:

    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-actuator</artifactId>
    </dependency>

Then, enable endpoints in application.yml:

    management:
      endpoints:
        web:
          exposure:
            include: "*"

Exposing "*" is convenient in development; in production, list only the endpoints you need. Now you get instant access to endpoints like:

- /actuator/health

- /actuator/metrics

- /actuator/httptrace (renamed /actuator/httpexchanges in Spring Boot 3)

This becomes essential to monitor latency spikes or increased failure rates from OpenAI’s side.
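To see latency and failure rates for the OpenAI call specifically, the call itself has to be measured. In a Spring Boot app a Micrometer Timer would usually do this and show up under /actuator/metrics. The sketch below is a dependency-free stand-in that shows the idea; CallStats is a hypothetical class.

```java
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.Supplier;

/** Hypothetical helper: counts outcomes and accumulates time spent in a wrapped call. */
final class CallStats {

    private final AtomicLong successes = new AtomicLong();
    private final AtomicLong failures = new AtomicLong();
    private final AtomicLong totalNanos = new AtomicLong();

    // Runs the call, records its outcome and duration, and rethrows any failure.
    <T> T record(Supplier<T> call) {
        long start = System.nanoTime();
        try {
            T result = call.get();
            successes.incrementAndGet();
            return result;
        } catch (RuntimeException e) {
            failures.incrementAndGet();
            throw e;
        } finally {
            totalNanos.addAndGet(System.nanoTime() - start);
        }
    }

    long successes() {
        return successes.get();
    }

    long failures() {
        return failures.get();
    }

    long totalNanos() {
        return totalNanos.get();
    }

    double failureRate() {
        long total = successes.get() + failures.get();
        return total == 0 ? 0.0 : (double) failures.get() / total;
    }
}
```

Usage would look like `stats.record(() -> openAiService.getResponse(prompt))`.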

Logging AI Interactions for Auditing

For enterprise use, logging AI interactions is a must.

Create a simple ChatLog entity:

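    @Entity
    public class ChatLog {

        @Id
        @GeneratedValue
        private Long id;

        private String prompt;
        private String response;
        private LocalDateTime timestamp;

        protected ChatLog() {
            // No-arg constructor required by JPA
        }

        public ChatLog(String prompt, String response, LocalDateTime timestamp) {
            this.prompt = prompt;
            this.response = response;
            this.timestamp = timestamp;
        }
    }

In your service:

    chatLogRepository.save(new ChatLog(prompt, response, LocalDateTime.now()));

This helps with: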

- Debugging strange responses

- Replaying past conversations

- Auditing data for compliance

Make sure to anonymize or encrypt sensitive user input before storing.
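As one illustration of anonymizing before storage, the sketch below masks email addresses and long digit runs (such as card or phone numbers) with regular expressions. PromptRedactor and its patterns are hypothetical and deliberately simple; real compliance requirements usually call for a more thorough approach.

```java
import java.util.regex.Pattern;

/** Hypothetical helper: masks obvious personal data before a prompt is logged. */
final class PromptRedactor {

    private static final Pattern EMAIL =
            Pattern.compile("[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\\.[A-Za-z]{2,}");

    // 12 to 19 digits, optionally separated by single spaces or hyphens.
    private static final Pattern LONG_NUMBER =
            Pattern.compile("\\b\\d(?:[ -]?\\d){11,18}\\b");

    static String redact(String text) {
        String withoutEmails = EMAIL.matcher(text).replaceAll("[EMAIL]");
        return LONG_NUMBER.matcher(withoutEmails).replaceAll("[NUMBER]");
    }
}
```

The service could call `PromptRedactor.redact(prompt)` just before building the ChatLog.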

Creating a Smart Support Bot

Let’s put everything together.

Imagine a support assistant that responds to customer queries. Here’s how you might structure that:

System Prompt

    new Message("system", "You are a helpful customer support agent for an e-commerce website.")

Dynamic User Prompt

    String prompt = "I want to return a pair of shoes but lost the receipt.";

Output

“No worries! You can still return your shoes within 30 days, even without a receipt. Just bring a valid ID.”

Now wrap this into an endpoint that classifies intent and gives answers — and you’ve built your first AI agent.
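One possible way to handle the intent-classification step is to ask the model for a single label and then parse the reply defensively, since models do not always answer with the bare label. The intent names below (RETURN, SHIPPING, BILLING) and the IntentClassifier class are hypothetical.

```java
import java.util.Locale;

/** Hypothetical helper: builds a classification prompt and parses the model's label. */
final class IntentClassifier {

    enum Intent { RETURN, SHIPPING, BILLING, UNKNOWN }

    static String classificationPrompt(String customerMessage) {
        return "Classify the customer message into exactly one of: "
             + "RETURN, SHIPPING, BILLING, UNKNOWN. Reply with the label only.\n\n"
             + "Message: " + customerMessage;
    }

    // Tolerates whitespace, casing, and punctuation; anything else maps to UNKNOWN.
    static Intent parse(String modelReply) {
        if (modelReply == null) {
            return Intent.UNKNOWN;
        }
        String cleaned = modelReply.trim().toUpperCase(Locale.ROOT).replaceAll("[^A-Z]", "");
        try {
            return Intent.valueOf(cleaned);
        } catch (IllegalArgumentException e) {
            return Intent.UNKNOWN;
        }
    }
}
```

The endpoint could first send `classificationPrompt(...)`, parse the reply, and then choose a system prompt or a canned policy answer based on the resulting intent.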

Summarizing Documents with OpenAI

Want to summarize a legal PDF, a long blog, or meeting minutes?

Feed the content like this:

    String prompt = "Summarize the following in under 100 words:\n\n" + largeText;

This is great for:

- Email digests

- Meeting summaries

- Article previews

Use Apache Tika or Tesseract to extract text from PDFs and images, then pass to OpenAI. Your app becomes a Swiss Army knife for productivity.
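Extracted documents can easily exceed what a model accepts in one request, so long text usually has to be split first. The sketch below breaks text into chunks on word boundaries. TextChunker is a hypothetical name, and it counts characters as a rough proxy because the real limit is measured in tokens.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: splits text into chunks of at most maxChars, on word boundaries. */
final class TextChunker {

    static List<String> chunk(String text, int maxChars) {
        if (maxChars <= 0) {
            throw new IllegalArgumentException("maxChars must be positive");
        }
        List<String> chunks = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        for (String word : text.trim().split("\\s+")) {
            if (word.isEmpty()) {
                continue;
            }
            // Start a new chunk when adding this word would exceed the limit.
            if (current.length() > 0 && current.length() + 1 + word.length() > maxChars) {
                chunks.add(current.toString());
                current.setLength(0);
            }
            if (current.length() > 0) {
                current.append(' ');
            }
            // A single word longer than maxChars still becomes its own oversized chunk.
            current.append(word);
        }
        if (current.length() > 0) {
            chunks.add(current.toString());
        }
        return chunks;
    }
}
```

One common pattern is to summarize each chunk separately and then ask for a summary of the summaries.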

Drafting Emails with AI

Sales reps, recruiters, marketers — everyone writes emails.

With OpenAI, you can build a mail-drafting assistant:

    String prompt = """
        Write a professional email to follow up with a client who hasn't responded in 5 days.
        Make it friendly and include a summary of the last meeting.
        """;

Pre-fill templates based on CRM data. Add this to your platform, and users will wonder how they ever lived without it.

Building FAQ and Knowledge Base Bot

Point your bot to an FAQ list. Then prompt:

    String prompt = "Answer the user's question using the knowledge below:\n" +
            faqList + "\n\nUser: " + userQuestion;

OpenAI will simulate retrieval-based Q&A, even without a vector database like Pinecone.

Later, you can plug in actual embeddings for more precision.
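Before reaching for embeddings, a cheap middle step is to send only the FAQ entries that look relevant instead of the whole list. The sketch below ranks entries by how many words they share with the question. FaqSelector is a hypothetical name, and plain keyword overlap is a crude stand-in for real semantic search.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

/** Hypothetical helper: picks the FAQ entries that share the most words with a question. */
final class FaqSelector {

    // Lowercased words longer than two characters.
    static Set<String> words(String text) {
        Set<String> out = new HashSet<>();
        for (String w : text.toLowerCase(Locale.ROOT).split("[^a-z0-9]+")) {
            if (w.length() > 2) {
                out.add(w);
            }
        }
        return out;
    }

    static int overlap(String faq, Set<String> questionWords) {
        int shared = 0;
        for (String w : words(faq)) {
            if (questionWords.contains(w)) {
                shared++;
            }
        }
        return shared;
    }

    // Highest overlap first; the sort is stable, so ties keep their original order.
    static List<String> topMatches(List<String> faqs, String question, int limit) {
        Set<String> questionWords = words(question);
        List<String> sorted = new ArrayList<>(faqs);
        sorted.sort(Comparator.comparingInt((String faq) -> overlap(faq, questionWords)).reversed());
        return new ArrayList<>(sorted.subList(0, Math.min(limit, sorted.size())));
    }

    static String prompt(List<String> faqs, String question, int limit) {
        return "Answer the user's question using the knowledge below:\n"
             + String.join("\n", topMatches(faqs, question, limit))
             + "\n\nUser: " + question;
    }
}
```

Sending fewer entries keeps the prompt shorter and cheaper, at the cost of missing answers that use different wording than the question.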

Frequently Asked Questions about Using OpenAI in a Spring Boot Project

Can I use OpenAI’s API with Java and Spring Boot easily?

Yes, you can. OpenAI provides a language-agnostic REST API, which means you can use it with Java and Spring Boot by sending HTTP POST requests with JSON payloads. With tools like RestTemplate or WebClient, the integration becomes smooth and straightforward.

Which OpenAI model should I use for my Java application?

For most use cases, gpt-3.5-turbo is ideal due to its balance between performance and cost. If your application requires more complex understanding, you can opt for gpt-4, but be aware it is more expensive and has different rate limits.

How do I secure my OpenAI API key in a Spring Boot application?

You should store the API key in an environment variable or in application.yml using a placeholder like ${OPENAI_API_KEY}. Never hardcode API keys directly into your Java classes. Spring’s @ConfigurationProperties is a great way to access them securely.

Can I stream OpenAI responses in real-time with Spring Boot?

Yes. You can simulate streaming using ResponseBodyEmitter, or implement real SSE (Server-Sent Events) support by consuming OpenAI’s streamed response with a reactive client such as WebClient. For many user-facing apps, the simulated approach is effective enough.

What’s the best way to test OpenAI integration in Java?

Use MockMvc or WebTestClient for controller testing, and mock your OpenAI service layer with tools like Mockito. You can also create integration tests with sample prompts and stubbed responses to validate the full flow.

Is there a free version of OpenAI I can use during development?

OpenAI offers a limited number of free credits when you first sign up. After that, it operates on a pay-as-you-go model. You can set spending limits on your account to avoid surprises while testing your Spring Boot integration.

We Want to Hear From You!

At Balian’s, we’re passionate about sharing real-world, practical tech knowledge — especially when it comes to AI, Java, and everything Spring Boot. But now, we want to tailor our next lecture series to what you want to learn most.

Tell us: What AI, coding, or Spring Boot-related topic would you love to see as a lecture or workshop from us?

Whether it’s:

- Building real-time AI assistants

- Using GPT-4 with Java in production

- Prompt engineering for enterprise systems

- Secure deployment of AI services in cloud environments

- Hands-on debugging of AI chat applications

Your voice shapes our future content.

➡ Leave a comment below or message us directly with your topic suggestions.

We’ll review every submission — and yes, your idea might become our next big live lecture or tutorial!

Let’s build better tech, together.

Find us

- Balian’s Blogs: Balian’s

- Balian’s Medium: Balian’s

- LinkedIn: Shant Khayalian

- Facebook: Balian’s

- X: Balian’s

- Web: Balian’s

- YouTube: Balian’s
