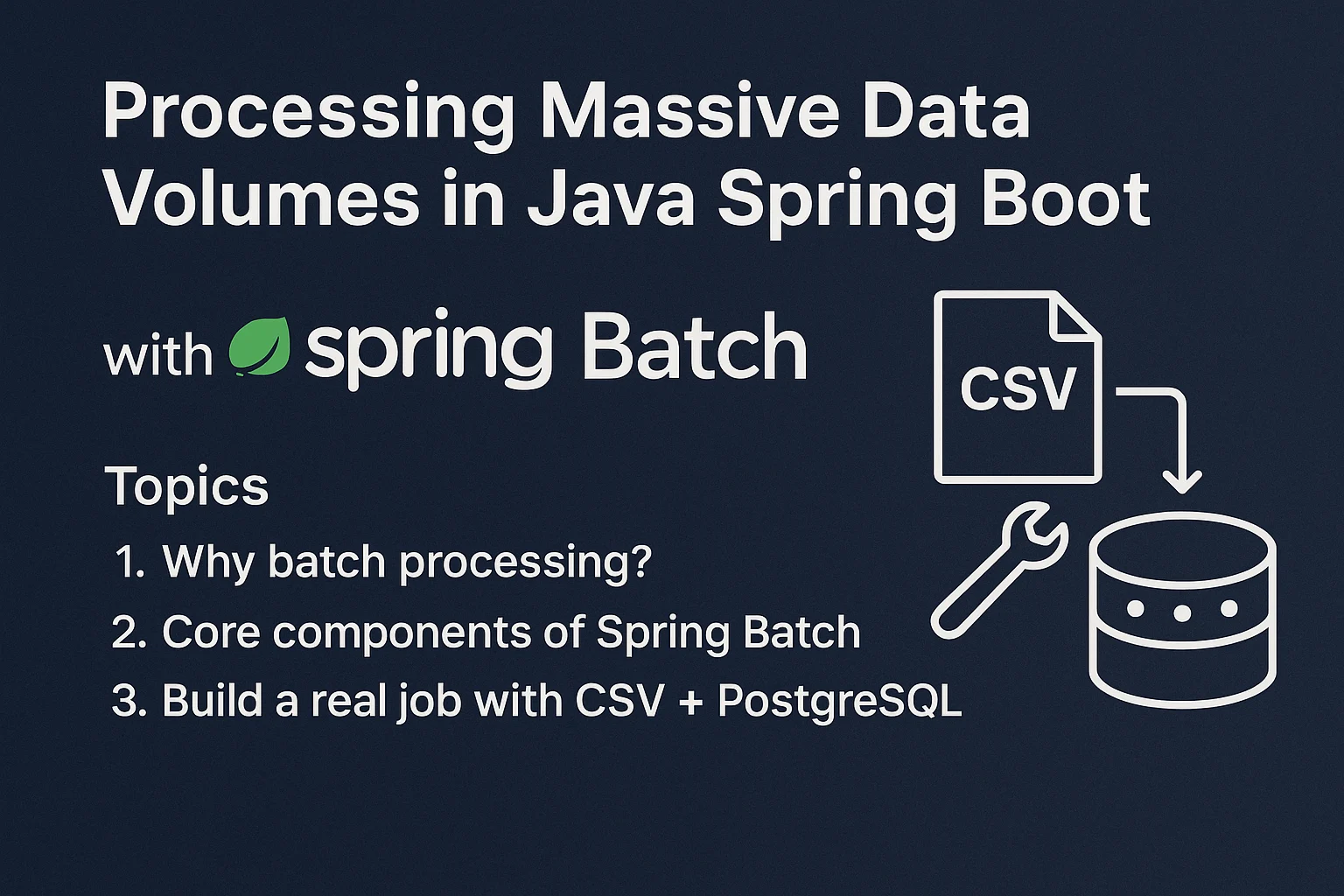

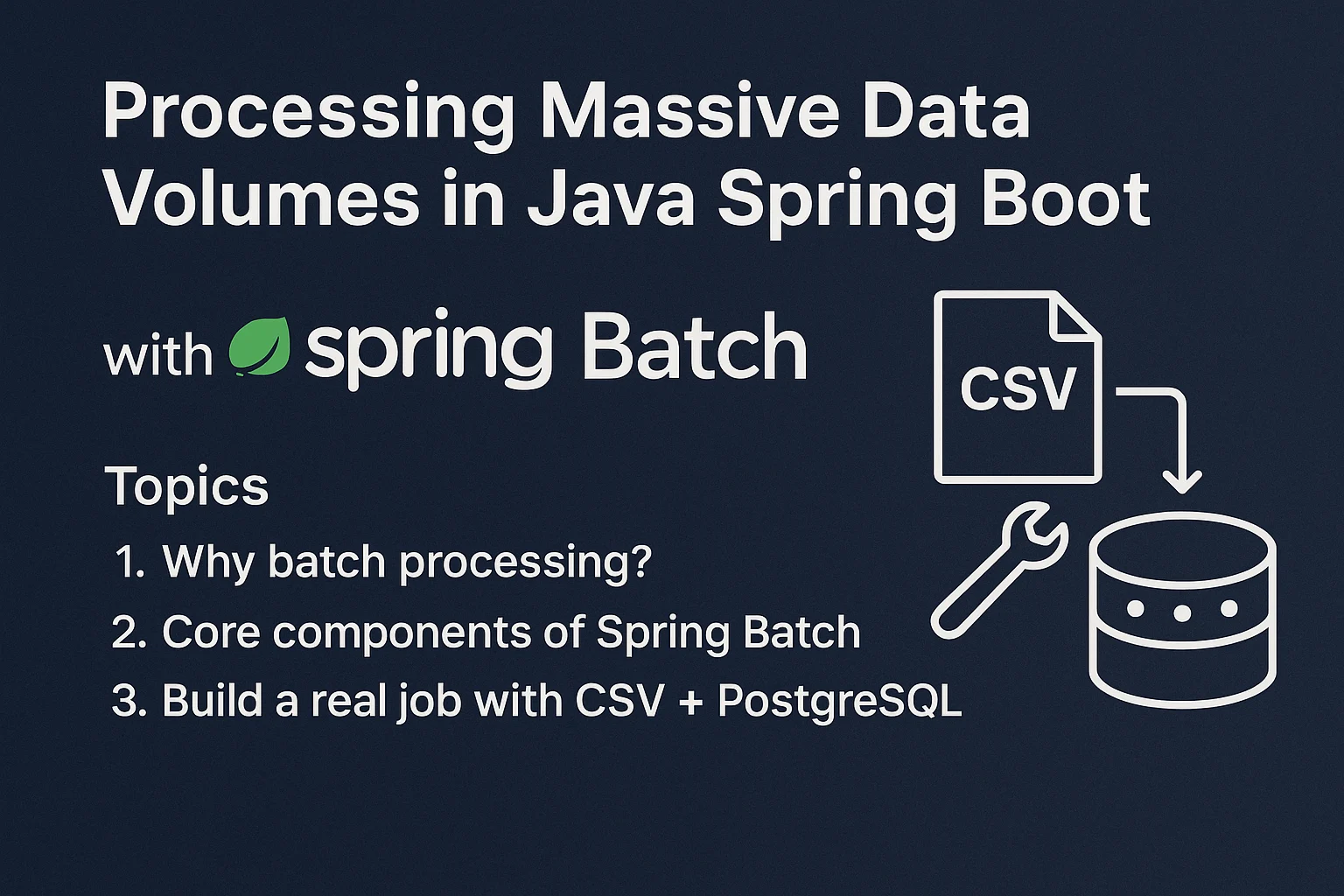

Processing Massive Data Volumes in Java Spring Boot (with Spring Batch)


In modern enterprise systems, data doesn’t just arrive — it floods. When handling massive datasets, especially in back-end infrastructure, Spring Batch offers a rock-solid solution for processing jobs efficiently, safely, and at scale.

What is Spring Batch?

Spring Batch is a lightweight, scalable batch processing framework in the Spring ecosystem. It’s built to support massive data jobs — from reading large files or databases, to transforming and writing the results to storage — all with transactional reliability, monitoring, and concurrency.

In this article, we’ll cover:

- Why batch processing matters for large data volumes
- Core architecture of Spring Batch
- How to build a real job that reads a CSV and persists it to PostgreSQL
- Bonus: reading bulk data back from the database

Let’s get into it!

Why Does Batch Processing Matter?

Processing massive data volumes isn’t just about writing a for-loop.

Batch processing helps:

✔ Improve performance: Handle data in chunks (e.g., 1,000 records per transaction) instead of committing record by record, and keep only one chunk in memory at a time

✔ Control resource usage: Schedule jobs off-peak or run them in parallel

✔ Ensure fault tolerance: Each chunk commits or rolls back as a unit, and failing items can be retried or skipped

✔ Enable reusability: Break work into steps that can be configured and reused independently
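At its core, "retry on failure" just means re-attempting an operation that failed for a transient reason. In Spring Batch you would normally declare this on the step builder with `faultTolerant()`, `retry(...)`, and `retryLimit(...)` rather than write the loop yourself. The plain-Java sketch below only illustrates the idea; `RetrySketch` and `withRetry` are hypothetical names, not Spring Batch API.

```java
import java.util.function.Supplier;

// Plain-Java analogue of a retry policy (hypothetical helper, not Spring Batch API):
// call the operation up to maxAttempts times and rethrow the last failure.
class RetrySketch {

    static <T> T withRetry(Supplier<T> operation, int maxAttempts) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be >= 1");
        }
        RuntimeException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return operation.get();
            } catch (RuntimeException e) {
                last = e; // remember the failure and try again
            }
        }
        throw last; // every attempt failed
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Hypothetical flaky write: fails twice with a transient error, then succeeds.
        String result = withRetry(() -> {
            calls[0]++;
            if (calls[0] < 3) {
                throw new IllegalStateException("transient DB error");
            }
            return "written";
        }, 3);
        System.out.println(result + " after " + calls[0] + " attempts");
    }
}
```

In a real chunk-oriented step, a retryable failure rolls back the current chunk before the next attempt, which this sketch does not model.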

Core Components of Spring Batch

| Component | Description |
|---|---|
| JobLauncher | Starts a job with a given set of JobParameters |
| Job | Encapsulates the whole batch operation as an ordered set of steps |
| Step | One phase of a job; a chunk-oriented step runs read → process → write |
| ItemReader | Reads items one at a time from a source (CSV, DB, etc.) |
| ItemProcessor | Transforms or filters each item (optional) |
| ItemWriter | Writes a whole chunk of items to a destination |
| JobRepository | Stores job metadata, status, and history |
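To make the chunk-oriented Step concrete, here's a framework-free sketch of the loop it runs. It uses plain JDK types (an `Iterator` as the reader, a `Function` as the processor) instead of Spring Batch's own interfaces, `ChunkLoopSketch` and `runStep` are hypothetical names, and it ignores transactions, skips, and retries.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.function.Function;

// Framework-free sketch of a chunk-oriented step (NOT the Spring Batch interfaces):
// read up to chunkSize items, process each one, then write the chunk as a unit.
class ChunkLoopSketch {

    // Runs the loop and returns the chunks in the order they were "written".
    static <I, O> List<List<O>> runStep(Iterator<I> reader,
                                        Function<I, O> processor,
                                        int chunkSize) {
        if (chunkSize < 1) {
            throw new IllegalArgumentException("chunkSize must be >= 1");
        }
        List<List<O>> writtenChunks = new ArrayList<>();
        while (reader.hasNext()) {
            List<O> chunk = new ArrayList<>(chunkSize);
            // Read and process until the chunk is full or the input runs out.
            while (chunk.size() < chunkSize && reader.hasNext()) {
                chunk.add(processor.apply(reader.next()));
            }
            // In Spring Batch, this write plus its commit is one transaction per chunk.
            writtenChunks.add(chunk);
        }
        return writtenChunks;
    }

    public static void main(String[] args) {
        List<Integer> ids = List.of(1, 2, 3, 4, 5, 6, 7, 8, 9, 10);
        List<List<String>> chunks = runStep(ids.iterator(), id -> "customer-" + id, 4);
        chunks.forEach(System.out::println);
    }
}
```

With 10 items and a chunk size of 4, the writer receives three chunks of 4, 4, and 2 items; in Spring Batch each of those writes would be committed as its own transaction.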

Real-World Use Case

Let’s say we have a CSV with 10,000 customer records, and we want to:

- Load them into a PostgreSQL database

- Later, extract customer first names for analysis

We’ll do this using Java 21, Spring Boot 3.4.3, and Spring Batch 5 (the version managed by Spring Boot 3.4).

pom.xml – Dependencies

```xml
<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-batch</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-data-jpa</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <dependency>
        <groupId>org.postgresql</groupId>
        <artifactId>postgresql</artifactId>
        <scope>runtime</scope>
    </dependency>
    <dependency>
        <groupId>org.projectlombok</groupId>
        <artifactId>lombok</artifactId>
        <scope>provided</scope>
    </dependency>
</dependencies>
```

Controller – Expose Endpoints for Demo

```java
import org.springframework.batch.core.Job;
import org.springframework.batch.core.JobExecution;
import org.springframework.batch.core.JobParameters;
import org.springframework.batch.core.JobParametersBuilder;
import org.springframework.batch.core.launch.JobLauncher;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.http.HttpStatus;
import org.springframework.http.ResponseEntity;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class ImportController {

    private final JobLauncher jobLauncher;
    private final Job importJob;
    private final Job fetchFirstNamesJob;

    // The @Qualifier values are the @Bean method names in SpringBatchConfig.
    public ImportController(JobLauncher jobLauncher,
                            @Qualifier("importCustomerJob") Job importJob,
                            @Qualifier("fetchFirstNamesJob") Job fetchFirstNamesJob) {
        this.jobLauncher = jobLauncher;
        this.importJob = importJob;
        this.fetchFirstNamesJob = fetchFirstNamesJob;
    }

    @GetMapping("/import-customers")
    public ResponseEntity<String> importCustomers() {
        return launch(importJob);
    }

    @GetMapping("/fetch-firstnames")
    public ResponseEntity<String> fetchFirstNames() {
        return launch(fetchFirstNamesJob);
    }

    private ResponseEntity<String> launch(Job job) {
        try {
            // A unique timestamp makes every request a new JobInstance.
            JobParameters params = new JobParametersBuilder()
                    .addLong("startAt", System.currentTimeMillis())
                    .toJobParameters();
            // The default JobLauncher is synchronous: this returns after the job ends.
            JobExecution execution = jobLauncher.run(job, params);
            return ResponseEntity.ok(job.getName() + " finished with status " + execution.getStatus());
        } catch (Exception e) {
            return ResponseEntity.status(HttpStatus.INTERNAL_SERVER_ERROR)
                    .body("Error: " + e.getMessage());
        }
    }
}
```

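We expose two endpoints: one loads the CSV, the other prints the first names. Each request passes a fresh `startAt` timestamp, so Spring Batch treats every call as a new job instance. Note that Spring Boot's default JobLauncher runs jobs synchronously, so the HTTP response only comes back once the job has finished.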
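Why does the timestamp matter? Spring Batch identifies a JobInstance by the job name plus its identifying JobParameters, and it refuses to rerun an instance that already completed (it throws `JobInstanceAlreadyCompleteException`). The toy model below shows that effect; `JobInstanceSketch` is a hypothetical class, not Spring Batch's implementation, and it ignores the case where a *failed* instance is relaunched, which Spring Batch treats as a restart.

```java
import java.util.HashSet;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

// Toy model (NOT Spring Batch's implementation): a job instance is keyed by
// job name + identifying parameters, and a completed instance cannot run again.
class JobInstanceSketch {

    private final Set<String> completedInstances = new HashSet<>();

    // Returns true if the launch is accepted, false if that instance already completed.
    boolean launch(String jobName, Map<String, Object> params) {
        // TreeMap gives a stable key regardless of parameter insertion order.
        String instanceKey = jobName + new TreeMap<>(params);
        if (completedInstances.contains(instanceKey)) {
            return false; // Spring Batch would throw JobInstanceAlreadyCompleteException
        }
        completedInstances.add(instanceKey); // pretend the job ran and completed
        return true;
    }

    public static void main(String[] args) {
        JobInstanceSketch launcher = new JobInstanceSketch();
        System.out.println(launcher.launch("importCustomerJob", Map.of("startAt", 1L))); // true
        System.out.println(launcher.launch("importCustomerJob", Map.of("startAt", 1L))); // false
        System.out.println(launcher.launch("importCustomerJob", Map.of("startAt", 2L))); // true
    }
}
```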

Entity Class

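```java
import jakarta.persistence.Entity;
import jakarta.persistence.Id;
import jakarta.persistence.Table;
import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

// JPA entity mapped to customer_info. Lombok generates getters/setters and
// the no-args constructor that the CSV field-set mapper needs.
@Entity
@Table(name = "customer_info")
@Data
@NoArgsConstructor
@AllArgsConstructor
public class Customer {

    @Id
    private String customerId; // taken from the CSV, not generated

    private String firstName;
    private String lastName;
    private String company;
    private String email;
}
```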

Repository

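```java
import org.springframework.data.jpa.repository.JpaRepository;

// Spring Data generates the implementation; JpaRepository also provides
// the paged findAll(Pageable) used by RepositoryItemReader.
public interface CustomerRepository extends JpaRepository<Customer, String> {
}
```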

Configuration – Heart of Spring Batch

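```java
import java.util.Collections;

import org.springframework.batch.core.Job;
import org.springframework.batch.core.Step;
import org.springframework.batch.core.configuration.annotation.StepScope;
import org.springframework.batch.core.job.builder.JobBuilder;
import org.springframework.batch.core.repository.JobRepository;
import org.springframework.batch.core.step.builder.StepBuilder;
import org.springframework.batch.item.ItemProcessor;
import org.springframework.batch.item.ItemWriter;
import org.springframework.batch.item.data.RepositoryItemReader;
import org.springframework.batch.item.data.RepositoryItemWriter;
import org.springframework.batch.item.file.FlatFileItemReader;
import org.springframework.batch.item.file.builder.FlatFileItemReaderBuilder;
import org.springframework.batch.item.support.SynchronizedItemStreamReader;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.core.io.ClassPathResource;
import org.springframework.core.task.TaskExecutor;
import org.springframework.data.domain.Sort;
import org.springframework.scheduling.concurrent.ThreadPoolTaskExecutor;
import org.springframework.transaction.PlatformTransactionManager;

@Configuration
public class SpringBatchConfig {

    // Items per transaction; overridable via batch.chunkSize in application.yml.
    @Value("${batch.chunkSize:1000}")
    private int chunkSize;

    private final CustomerRepository customerRepository;

    public SpringBatchConfig(CustomerRepository customerRepository) {
        this.customerRepository = customerRepository;
    }

    // -- JOB 1: Import Customers from CSV --

    @Bean
    public Job importCustomerJob(JobRepository repo, @Qualifier("step1") Step step1) {
        return new JobBuilder("importCustomerJob", repo)
                .start(step1)
                .build();
    }

    @Bean
    public Step step1(JobRepository repo, PlatformTransactionManager txManager) {
        return new StepBuilder("csv-step", repo)
                // Read/process up to chunkSize items, then write and commit them together.
                .<Customer, Customer>chunk(chunkSize, txManager)
                .reader(csvReader())
                .processor(csvProcessor())
                .writer(csvWriter())
                // Multi-threaded step: chunks run concurrently, in no guaranteed order.
                .taskExecutor(taskExecutor())
                .build();
    }

    @Bean
    @StepScope
    public SynchronizedItemStreamReader<Customer> csvReader() {
        FlatFileItemReader<Customer> fileReader = new FlatFileItemReaderBuilder<Customer>()
                .name("csvReader")
                // Load from the classpath so it works regardless of the working directory.
                .resource(new ClassPathResource("customers.csv"))
                .delimited()
                .names("customerId", "firstName", "lastName", "company", "email")
                .linesToSkip(1)               // skip the header row
                .targetType(Customer.class)   // bind named columns to Customer's setters
                .saveState(false)             // restart state is unreliable with shared threads
                .build();

        // FlatFileItemReader is not thread-safe; serialize read() across worker threads.
        SynchronizedItemStreamReader<Customer> reader = new SynchronizedItemStreamReader<>();
        reader.setDelegate(fileReader);
        return reader;
    }

    @Bean
    public ItemProcessor<Customer, Customer> csvProcessor() {
        // Pass-through; validation or transformation logic would go here.
        return customer -> customer;
    }

    @Bean
    public RepositoryItemWriter<Customer> csvWriter() {
        RepositoryItemWriter<Customer> writer = new RepositoryItemWriter<>();
        writer.setRepository(customerRepository);
        writer.setMethodName("save"); // invoked once per item in the chunk
        return writer;
    }

    // -- JOB 2: Fetch First Names --

    @Bean
    public Job fetchFirstNamesJob(JobRepository repo, @Qualifier("step2") Step step2) {
        return new JobBuilder("fetchFirstNamesJob", repo)
                .start(step2)
                .build();
    }

    @Bean
    public Step step2(JobRepository repo, PlatformTransactionManager txManager) {
        return new StepBuilder("fetch-firstnames-step", repo)
                .<Customer, String>chunk(chunkSize, txManager)
                .reader(firstNamesReader())
                .processor(firstNamesProcessor())
                .writer(firstNamesWriter())
                .taskExecutor(taskExecutor())
                .build();
    }

    @Bean
    @StepScope
    public RepositoryItemReader<Customer> firstNamesReader() {
        RepositoryItemReader<Customer> reader = new RepositoryItemReader<>();
        reader.setRepository(customerRepository);
        reader.setMethodName("findAll"); // invoked as findAll(Pageable), one page at a time
        // A stable sort keeps pages from overlapping or skipping rows.
        reader.setSort(Collections.singletonMap("customerId", Sort.Direction.ASC));
        reader.setPageSize(chunkSize);
        reader.setSaveState(false); // no restart state in a multi-threaded step
        return reader;
    }

    @Bean
    public ItemProcessor<Customer, String> firstNamesProcessor() {
        return Customer::getFirstName;
    }

    @Bean
    public ItemWriter<String> firstNamesWriter() {
        // write() receives a Chunk in Spring Batch 5: print each item, not the chunk object.
        return chunk -> chunk.forEach(System.out::println);
    }

    @Bean
    public TaskExecutor taskExecutor() {
        ThreadPoolTaskExecutor executor = new ThreadPoolTaskExecutor();
        executor.setCorePoolSize(4);
        // With the default unbounded queue, the pool never grows past the core size.
        executor.setMaxPoolSize(10);
        executor.setThreadNamePrefix("batch-thread-");
        // No initialize() call: Spring invokes it through afterPropertiesSet().
        return executor;
    }
}
```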
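The CSV reader is configured declaratively. To see roughly what `delimited()`, `names(...)`, and `linesToSkip(1)` amount to for each line, here's a simplified plain-Java sketch. `CsvMappingSketch` and `CustomerRow` are hypothetical names, and the sample row is made up. It binds columns by position into a record, whereas the real reader binds the named columns to `Customer`'s setters; and unlike Spring Batch's `DelimitedLineTokenizer`, this naive `split` does not handle quoted fields that contain commas.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified stand-in for the per-line work of a delimited FlatFileItemReader:
// skip header lines, split on commas, and map the columns to a value object.
class CsvMappingSketch {

    // Hypothetical value type mirroring the Customer entity's five columns.
    record CustomerRow(String customerId, String firstName, String lastName,
                       String company, String email) {}

    static List<CustomerRow> parse(List<String> lines, int linesToSkip) {
        List<CustomerRow> rows = new ArrayList<>();
        for (int i = linesToSkip; i < lines.size(); i++) {
            // The -1 limit keeps trailing empty columns instead of dropping them.
            String[] f = lines.get(i).split(",", -1);
            if (f.length != 5) {
                throw new IllegalArgumentException(
                        "Line " + (i + 1) + ": expected 5 columns, got " + f.length);
            }
            rows.add(new CustomerRow(f[0], f[1], f[2], f[3], f[4]));
        }
        return rows;
    }

    public static void main(String[] args) {
        List<String> lines = List.of(
                "customerId,firstName,lastName,company,email",
                "C001,Ada,Lovelace,Analytical Engines,ada@example.com");
        System.out.println(parse(lines, 1));
    }
}
```

Rejecting rows with the wrong column count mirrors the strict default of a delimited tokenizer: a malformed line fails loudly instead of being silently misaligned.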

application.yml

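```yaml
spring:
  datasource:
    url: jdbc:postgresql://localhost:5432/spring_batch_demo
    username: your_username
    password: your_password
    driver-class-name: org.postgresql.Driver
  jpa:
    hibernate:
      ddl-auto: update          # create/update customer_info from the entity
    show-sql: true
  batch:
    job:
      enabled: false            # don't run jobs at startup; the REST endpoints launch them
    jdbc:
      initialize-schema: always # create Spring Batch's metadata tables

# Custom property read by @Value("${batch.chunkSize:1000}")
batch:
  chunkSize: 1000
```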

Demo Time

- Start the application. Spring Batch auto-creates its job metadata tables in PostgreSQL.
- Call `/import-customers`. Spring Batch reads the CSV and saves all records to `customer_info`.
- Call `/fetch-firstnames`. Spring Batch reads the table and prints customer first names to the console.

What Just Happened?

| Aspect | How it's handled |
|---|---|
| Source | CSV file |
| Target | PostgreSQL |
| Job framework | Spring Batch |
| Execution | REST endpoints `/import-customers` and `/fetch-firstnames` |
| Fault tolerance | Per-chunk transactions |
| Scaling | Multi-threaded step (ThreadPoolTaskExecutor) |
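About that multi-threaded executor: every worker thread pulls items from the same reader, and Spring Batch's `FlatFileItemReader` is documented as not thread-safe; the usual remedy is to wrap it in `SynchronizedItemStreamReader`. The sketch below shows the underlying idea with plain JDK concurrency. `SynchronizedReaderSketch` and its nested `SynchronizedReader` are hypothetical names: the reader guards `read()` with `synchronized`, so several threads draining one shared iterator each receive distinct items.

```java
import java.util.HashSet;
import java.util.Iterator;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.stream.IntStream;

// Sketch: worker threads share ONE reader. A synchronized read() (the idea behind
// Spring Batch's SynchronizedItemStreamReader) hands each item to exactly one thread.
class SynchronizedReaderSketch {

    // Hypothetical minimal reader: returns null at end of input, like ItemReader.read().
    static final class SynchronizedReader<T> {
        private final Iterator<T> delegate; // not thread-safe on its own

        SynchronizedReader(Iterator<T> delegate) {
            this.delegate = delegate;
        }

        synchronized T read() {
            return delegate.hasNext() ? delegate.next() : null;
        }
    }

    // Drains the items with `threads` workers and returns everything they received.
    static List<Integer> readConcurrently(List<Integer> items, int threads) {
        SynchronizedReader<Integer> reader = new SynchronizedReader<>(items.iterator());
        Queue<Integer> received = new ConcurrentLinkedQueue<>();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int t = 0; t < threads; t++) {
            pool.execute(() -> {
                Integer item;
                while ((item = reader.read()) != null) {
                    received.add(item);
                }
            });
        }
        pool.shutdown();
        try {
            if (!pool.awaitTermination(30, TimeUnit.SECONDS)) {
                throw new IllegalStateException("workers did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for workers", e);
        }
        return List.copyOf(received);
    }

    public static void main(String[] args) {
        List<Integer> ids = IntStream.rangeClosed(1, 10_000).boxed().toList();
        List<Integer> received = readConcurrently(ids, 4);
        System.out.println(received.size() + " reads, "
                + new HashSet<>(received).size() + " distinct");
    }
}
```

Running `main` prints `10000 reads, 10000 distinct`. Synchronizing the reader fixes duplicate or corrupted reads, but items still finish in no particular order, which is why multi-threaded steps don't restart reliably.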

Conclusion

Spring Batch gives you powerful tools to handle large-scale data processing:

- Modular architecture

- Transactional chunking

- Metadata tracking

- Multi-threading and restartability (though multi-threaded steps give up reliable restarts)

At first glance, Spring Batch may look verbose — but once you’ve set it up, it becomes your go-to engine for data migration, ETL, reporting, and analytics.

Want the full repo?

GitLab – the code

FAQ

What is Spring Batch used for?

Spring Batch is used for developing robust and scalable batch processing applications in Java. It simplifies reading, transforming, and writing large volumes of data efficiently and transactionally, making it ideal for ETL jobs, reporting, and data migrations.

How does chunk processing work in Spring Batch?

Chunk processing in Spring Batch means processing data in manageable pieces (e.g., 1000 records at a time). Each chunk is read, processed, and written as a transaction. This reduces memory usage, increases throughput, and enhances fault tolerance.

Can I use Spring Batch with databases other than PostgreSQL?

Yes. Spring Batch ships metadata schema scripts for many relational databases, including MySQL, Oracle, SQL Server, and H2. Add the matching JDBC driver dependency and point the DataSource settings in your application.yml or application.properties at that database.

Is Spring Batch suitable for real-time processing?

Spring Batch is primarily designed for batch (non-real-time) operations. For real-time data handling, consider combining it with Spring Integration or Spring Cloud Data Flow to achieve hybrid solutions.

How does Spring Batch handle job failures or restarts?

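Spring Batch records every job and step execution in the JobRepository. If a job fails, relaunching it with the same identifying JobParameters restarts that job instance: completed steps are not re-run, and a step whose reader saves its state resumes after its last committed chunk, so data is neither lost nor written twice. Note that the demo controller adds a fresh `startAt` timestamp on every call, so each request starts a new instance from scratch rather than restarting a failed one.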
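Here's a toy model of restart-from-last-commit. `RestartSketch` is a hypothetical class, not Spring Batch code: after every committed chunk it records how many items were consumed, much as Spring Batch's item-counting readers keep a read count in the step's ExecutionContext. A simulated crash mid-chunk discards that partial chunk, and the second run resumes right after the last commit.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model of restart-from-last-commit (NOT Spring Batch code). committedCount
// stands in for the read count persisted in the step's ExecutionContext.
class RestartSketch {

    private int committedCount = 0;            // "persisted" restart position
    final List<String> written = new ArrayList<>();

    // failAt is a hypothetical item index that simulates a crash (-1 = no failure).
    // Returns true if the run completed.
    boolean run(List<String> items, int chunkSize, int failAt) {
        int position = committedCount;         // resume after the last commit
        while (position < items.size()) {
            int end = Math.min(position + chunkSize, items.size());
            List<String> chunk = new ArrayList<>();
            for (int i = position; i < end; i++) {
                if (i == failAt) {
                    return false;              // crash: the partial chunk is rolled back
                }
                chunk.add(items.get(i));
            }
            written.addAll(chunk);             // write the chunk and ...
            committedCount = end;              // ... commit the new position together
            position = end;
        }
        return true;
    }

    public static void main(String[] args) {
        List<String> items = List.of("a", "b", "c", "d", "e", "f", "g");
        RestartSketch step = new RestartSketch();
        System.out.println(step.run(items, 3, 4));  // false: fails inside the second chunk
        System.out.println(step.written);           // [a, b, c]
        System.out.println(step.run(items, 3, -1)); // true: resumes at "d"
        System.out.println(step.written);           // [a, b, c, d, e, f, g]
    }
}
```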

Is Spring Batch scalable for large enterprise applications?

Absolutely. Spring Batch supports parallel step execution, partitioning, and multi-threaded chunk processing. These features make it highly scalable and capable of handling millions of records in high-load environments.
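Partitioning splits one step's input into independent slices that worker steps process in parallel. In Spring Batch you would implement the `Partitioner` interface, whose `partition(int gridSize)` returns a `Map<String, ExecutionContext>`. The plain-Java sketch below (hypothetical `RangePartitionSketch`) shows only the range-splitting logic and assumes a numeric key; the article's `customerId` is a `String`, so applying this directly would need a numeric column or another splitting scheme.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Plain-Java sketch of range partitioning: split [min, max] into gridSize
// contiguous, non-overlapping ranges, one per worker. Each partition is a
// long[] {from, to} here, standing in for a Spring Batch ExecutionContext.
class RangePartitionSketch {

    static Map<String, long[]> partition(long min, long max, int gridSize) {
        if (gridSize < 1 || max < min) {
            throw new IllegalArgumentException("need gridSize >= 1 and max >= min");
        }
        long total = max - min + 1;
        long baseSize = total / gridSize;
        long remainder = total % gridSize;    // first `remainder` partitions get one extra key
        Map<String, long[]> partitions = new LinkedHashMap<>();
        long from = min;
        for (int i = 0; i < gridSize && from <= max; i++) {
            long size = baseSize + (i < remainder ? 1 : 0);
            long to = from + size - 1;
            partitions.put("partition" + i, new long[] {from, to});
            from = to + 1;
        }
        return partitions;
    }

    public static void main(String[] args) {
        // e.g. 10,000 numeric IDs spread across 4 workers
        partition(1, 10_000, 4).forEach((name, range) ->
                System.out.println(name + ": " + range[0] + ".." + range[1]));
    }
}
```

Each worker then reads only its own key range, so workers never contend for the same rows and no shared, synchronized reader is needed.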

Find us

Balian’s Blog

LinkedIn Shant Khayalian

Facebook Balian’s

Web Balian’s

YouTube Balian’s
